Gesture Recognizers

Note: This chapter contains information that used to be in iPad Programming Guide. The information in this chapter has not been updated specifically for iOS 4.0.

Applications for iOS are driven largely through events generated when users touch buttons, toolbars, table-view rows and other objects in an application’s user interface. The classes of the UIKit framework provide default event-handling behavior for most of these objects. However, some applications, primarily those with custom views, have to do their own event handling. They have to analyze the stream of touch objects in a multitouch sequence and determine the intention of the user.

Most event-handling views seek to detect common gestures that users make on their surface, such as triple-tap, touch-and-hold (also called long press), pinching, and rotating gestures. The code for examining a raw stream of multitouch events and detecting one or more gestures is often complex. Prior to iOS 3.2, you could not reuse such code except by copying it into another project and modifying it appropriately.

To help applications detect gestures, iOS 3.2 introduces gesture recognizers, objects that inherit directly from the UIGestureRecognizer class. The following sections describe how these objects work, how to use them, and how to create custom gesture recognizers that you can reuse among your applications.

Note: For an overview of multitouch events on iOS, see iOS Application Programming Guide.

Gesture Recognizers Simplify Event Handling

UIGestureRecognizer is the abstract base class for concrete gesture-recognizer subclasses (or, simply, gesture recognizers). The UIGestureRecognizer class defines a programmatic interface and implements the behavioral underpinnings for gesture recognition. The UIKit framework provides six gesture recognizers for the most common gestures. For other gestures, you can design and implement your own gesture recognizer (see “Creating Custom Gesture Recognizers” for details).

Recognized Gestures

The UIKit framework supports the recognition of the gestures listed in Table 3-1. Each of the listed classes is a direct subclass of UIGestureRecognizer.

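| Gesture | UIKit class |
|---|---|
| Tapping (any number of taps) | UITapGestureRecognizer |
| Pinching in and out (for zooming a view) | UIPinchGestureRecognizer |
| Panning or dragging | UIPanGestureRecognizer |
| Swiping (in any direction) | UISwipeGestureRecognizer |
| Rotating (fingers moving in opposite directions) | UIRotationGestureRecognizer |
| Long press (also known as “touch and hold”) | UILongPressGestureRecognizer |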

Before you decide to use a gesture recognizer, consider how you are going to use it. Respond to gestures only in ways that users expect. For example, a pinching gesture should scale a view, zooming it in and out; it should not be interpreted as, say, a selection request, for which a tap is more appropriate. For guidelines about the proper use of gestures, see iPhone Human Interface Guidelines.

Gesture Recognizers Are Attached to a View

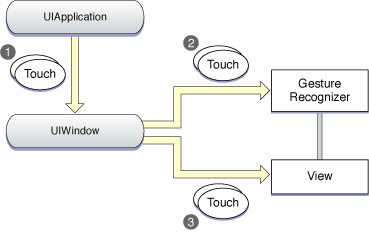

To detect its gestures, a gesture recognizer must be attached to the view that a user is touching. This view is known as the hit-tested view. Recall that events in iOS are represented by UIEvent objects, and each event object encapsulates the UITouch objects of the current multitouch sequence. A set of those UITouch objects is specific to a given phase of a multitouch sequence. Delivery of events initially follows the usual path: from the operating system to the application object to the window object representing the window in which the touches are occurring. But before sending an event to the hit-tested view, the window object sends it to the gesture recognizers attached to that view or to any of that view’s superviews. Figure 3-1 illustrates this general path, with the numbers indicating the order in which touches are received.

Thus gesture recognizers act as observers of touch objects sent to their attached view or view hierarchy. However, they are not part of that view hierarchy and do not participate in the responder chain. Gesture recognizers may delay the delivery of touch objects to the view while they are recognizing gestures, and by default they cancel delivery of remaining touch objects to the view once they recognize their gesture. For more on the possible scenarios of event delivery from a gesture recognizer to its view, see “Regulating the Delivery of Touches to Views.”

For some gestures, the locationInView: and the locationOfTouch:inView: methods of UIGestureRecognizer enable clients to find the location of gestures or specific touches in the attached view or its subviews. See “Responding to Gestures” for more information.

Gestures Trigger Action Messages

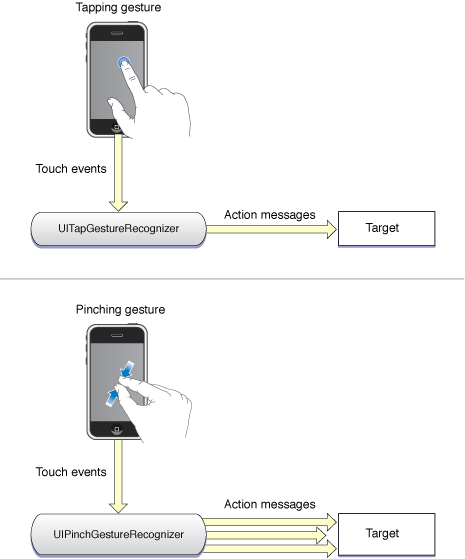

When a gesture recognizer recognizes its gesture, it sends one or more action messages to one or more targets. When you create a gesture recognizer, you initialize it with an action and a target. You may add more target-action pairs to it thereafter. The target-action pairs are not additive; in other words, an action is only sent to the target it was originally linked with, and not to other targets (unless they’re specified in another target-action pair).
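To make the non-additive rule concrete, here is a minimal model in plain C (which also compiles as Objective-C). It is a hypothetical sketch, not UIKit's implementation: RecognizerModel, TargetAction, recognizer_add_target, recognizer_send_actions, and the handle_* functions are invented for illustration. Each stored pair keeps its own target, so sending actions never delivers one pair's action to another pair's target.

```c
#include <stddef.h>

/* Hypothetical model: an action is a plain C function that receives
   the target it was paired with. */
typedef void (*ActionFn)(void *target);

typedef struct {
    void *target;
    ActionFn action;
} TargetAction;

#define MAX_PAIRS 8

typedef struct {
    TargetAction pairs[MAX_PAIRS];
    size_t count;
} RecognizerModel;

/* Plays the role of initWithTarget:action: and addTarget:action:.
   Returns 0 if the model has no room for another pair. */
int recognizer_add_target(RecognizerModel *r, void *target, ActionFn action) {
    if (r->count >= MAX_PAIRS) return 0;
    r->pairs[r->count].target = target;
    r->pairs[r->count].action = action;
    r->count++;
    return 1;
}

/* On recognition, each action goes only to the target it was paired with. */
void recognizer_send_actions(const RecognizerModel *r) {
    for (size_t i = 0; i < r->count; i++) {
        r->pairs[i].action(r->pairs[i].target);
    }
}

/* Example targets that count how often each action reached them. */
typedef struct { int zoomCalls; int logCalls; } Counter;

void handle_zoom(void *target) { ((Counter *)target)->zoomCalls++; }
void handle_log(void *target)  { ((Counter *)target)->logCalls++; }
```

With two pairs registered, one call to recognizer_send_actions increments the zoom counter only on the first target and the log counter only on the second.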

Discrete Gestures and Continuous Gestures

When a gesture recognizer recognizes a gesture, it sends either a single action message to its target or multiple action messages until the gesture ends. This behavior is determined by whether the gesture is discrete or continuous. A discrete gesture, such as a double-tap, happens just once; when a gesture recognizer recognizes a discrete gesture, it sends its target a single action message. A continuous gesture, such as pinching, takes place over a period of time and ends when the user lifts the final finger in the multitouch sequence. The gesture recognizer sends action messages to its target at short intervals until the multitouch sequence ends.

The reference documents for the gesture-recognizer classes note whether the instances of the class detect discrete or continuous gestures.

Implementing Gesture Recognition

To implement gesture recognition, you create a gesture-recognizer instance to which you assign a target, action, and, in some cases, gesture-specific attributes. You attach this object to a view and then implement the action method in your target object that handles the gesture.

Preparing a Gesture Recognizer

To create a gesture recognizer, you must allocate and initialize an instance of a concrete UIGestureRecognizer subclass. When you initialize it, specify a target object and an action selector, as in the following code:

```objc
UITapGestureRecognizer *doubleFingerDTap = [[UITapGestureRecognizer alloc]
    initWithTarget:self action:@selector(handleDoubleDoubleTap:)];
```

The action methods for handling gestures—and the selector for identifying them—are expected to conform to one of two signatures:

```objc
- (void)handleGesture;
- (void)handleGesture:(UIGestureRecognizer *)sender;
```

where handleGesture and sender can be any names you choose. Methods having the second signature allow the target to query the gesture recognizer for additional information. For example, the target of a UIPinchGestureRecognizer object can ask that object for the current scale factor related to the pinching gesture.

After you create a gesture recognizer, you must attach it to the view receiving touches—that is, the hit-test view—using the UIView method addGestureRecognizer:. You can find out what gesture recognizers a view currently has attached through the gestureRecognizers property, and you can detach a gesture recognizer from a view by calling removeGestureRecognizer:.

The sample method in Listing 3-1 creates and initializes three gesture recognizers: a single-finger double-tap, a panning gesture, and a pinching gesture. It then attaches each gesture-recognizer object to the same view. For the singleFingerDTap object, the code specifies that two taps are required for the gesture to be recognized. After adding each gesture recognizer to the view, the method releases it, because the view now retains it.

Listing 3-1 Creating and initializing discrete and continuous gesture recognizers

```objc
- (void)createGestureRecognizers {
    // Discrete gesture: a single-finger double-tap.
    UITapGestureRecognizer *singleFingerDTap = [[UITapGestureRecognizer alloc]
        initWithTarget:self action:@selector(handleSingleDoubleTap:)];
    singleFingerDTap.numberOfTapsRequired = 2;
    [self.theView addGestureRecognizer:singleFingerDTap];
    [singleFingerDTap release]; // the view now retains the recognizer

    // Continuous gesture: panning.
    UIPanGestureRecognizer *panGesture = [[UIPanGestureRecognizer alloc]
        initWithTarget:self action:@selector(handlePanGesture:)];
    [self.theView addGestureRecognizer:panGesture];
    [panGesture release];

    // Continuous gesture: pinching.
    UIPinchGestureRecognizer *pinchGesture = [[UIPinchGestureRecognizer alloc]
        initWithTarget:self action:@selector(handlePinchGesture:)];
    [self.theView addGestureRecognizer:pinchGesture];
    [pinchGesture release];
}
```

You can add more target-action pairs to a gesture recognizer using the addTarget:action: method of UIGestureRecognizer. Remember that the action messages for each target-action pair are restricted to that pair; if you have multiple targets and actions, they are not additive.

Responding to Gestures

To handle a gesture, the target for the gesture recognizer must implement a method corresponding to the action selector specified when you initialized the gesture recognizer. For discrete gestures, such as a tapping gesture, the gesture recognizer invokes the method once per recognition; for continuous gestures, the gesture recognizer invokes the method at repeated intervals until the gesture ends (that is, the last finger is lifted from the gesture recognizer’s view).

In gesture-handling methods, the target object often gets additional information about the gesture from the gesture recognizer; it does this by obtaining the value of a property defined by the gesture recognizer, such as scale (for scale factor) or velocity. It can also query the gesture recognizer (in appropriate cases) for the location of the gesture.

Listing 3-2 shows handlers for two continuous gestures: a pinching gesture (handlePinchGesture:) and a panning gesture (handlePanGesture:). It also gives an example of a handler for a discrete gesture; in this example, when the user double-taps the view with a single finger, the handler (handleSingleDoubleTap:) centers the view at the location of the double-tap.

Listing 3-2 Handling pinch, pan, and double-tap gestures

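```objc
// Assumes currentImageFrame is a CGRect instance variable holding the view's
// frame as of the start of the current pan (initialize it to the view's frame).

- (IBAction)handlePinchGesture:(UIGestureRecognizer *)sender {
    // scale is relative to the view's state when the gesture began.
    CGFloat factor = [(UIPinchGestureRecognizer *)sender scale];
    sender.view.transform = CGAffineTransformMakeScale(factor, factor);
}

- (IBAction)handlePanGesture:(UIPanGestureRecognizer *)sender {
    // Translation accumulated since the pan began, in the superview's
    // coordinate system, which is the one the view's frame uses.
    CGPoint translate = [sender translationInView:sender.view.superview];
    CGRect newFrame = currentImageFrame;
    newFrame.origin.x += translate.x;
    newFrame.origin.y += translate.y;
    sender.view.frame = newFrame;
    // Commit the new frame only when the gesture is over.
    if (sender.state == UIGestureRecognizerStateEnded)
        currentImageFrame = newFrame;
}

- (IBAction)handleSingleDoubleTap:(UIGestureRecognizer *)sender {
    // Tap location in superview coordinates, the system that center uses.
    CGPoint tapPoint = [sender locationInView:sender.view.superview];
    [UIView beginAnimations:nil context:NULL];
    sender.view.center = tapPoint;
    [UIView commitAnimations];
}
```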

These action methods handle the gestures in distinctive ways:

In the handlePinchGesture: method, the target communicates with its gesture recognizer (sender) to get the scale factor (scale). The method passes the scale value to the Core Graphics function CGAffineTransformMakeScale and assigns the resulting affine transform to the view’s transform property.

The handlePanGesture: method applies the translationInView: values obtained from its gesture recognizer to a cached frame value for the attached view. When the gesture concludes, it caches the newest frame value.

In the handleSingleDoubleTap: method, the target gets the location of the double-tap gesture from its gesture recognizer by calling the locationInView: method. It then uses this point, expressed in superview coordinates, to animate the center of the view to the location of the double-tap.
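The caching pattern used by handlePanGesture: can be checked outside UIKit with a small plain-C model (C code also compiles as Objective-C). Point2D, Rect2D, PanState, PanModel, and pan_update are hypothetical stand-ins for CGPoint, CGRect, the gesture states, and the handler; the one assumption carried over from the listing is that each reported translation is measured from where the pan began.

```c
/* Hypothetical stand-ins for CGPoint, CGRect, and the relevant gesture states. */
typedef struct { double x, y; } Point2D;
typedef struct { Point2D origin; double width, height; } Rect2D;
typedef enum { PanBegan, PanChanged, PanEnded } PanState;

typedef struct {
    Rect2D cachedFrame;  /* plays the role of currentImageFrame */
    Rect2D viewFrame;    /* the frame currently shown on screen */
} PanModel;

/* The translation is cumulative since the pan began, so it is added to
   the cached starting frame, never to the frame already on screen. */
void pan_update(PanModel *m, Point2D translation, PanState state) {
    Rect2D newFrame = m->cachedFrame;
    newFrame.origin.x += translation.x;
    newFrame.origin.y += translation.y;
    m->viewFrame = newFrame;
    if (state == PanEnded) {
        m->cachedFrame = newFrame;  /* the next pan starts from here */
    }
}
```

Because the cached frame changes only on PanEnded, every intermediate update starts from the same origin, and the next pan starts from wherever the last one left the view.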

The scale factor obtained in the handlePinchGesture: method, as with the rotation angle and the translation value related to other recognizers of continuous gestures, is to be applied to the state of the view when the gesture is first recognized. It is not a delta value to be concatenated over each handler invocation for a given gesture.
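The difference between applying the scale to the starting state and concatenating it can be seen with a few numbers. This plain-C sketch uses hypothetical function names; it assumes, as the paragraph above states, that each reported scale is measured relative to the view's scale when the gesture began.

```c
#include <stddef.h>

/* Correct: the reported scale is relative to the view's state when the
   gesture began, so multiply it into that saved starting scale. */
double scale_from_start(double startScale, double reportedScale) {
    return startScale * reportedScale;
}

/* Incorrect: treating each reported value as a delta and concatenating
   it onto the already-scaled view compounds the zoom. */
double scale_by_concatenating(double startScale, const double *reported, size_t n) {
    double s = startScale;
    for (size_t i = 0; i < n; i++) {
        s *= reported[i];
    }
    return s;
}
```

For reported scales of 1.25, 1.5, and 2.0 during one pinch, the correct final scale is 2.0, while concatenating them produces 3.75. CGAffineTransformMakeScale(factor, factor) in Listing 3-2 corresponds to scale_from_start with a starting scale of 1.0, that is, a view whose transform starts as the identity.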

A hit-test view with an attached gesture recognizer does not have to be passive when there are incoming touch events. It can determine which gesture recognizers, if any, are involved with a particular UITouch object by querying that touch object’s gestureRecognizers property. Similarly, it can find out which touches a given gesture recognizer is analyzing for a given event by calling the UIEvent method touchesForGestureRecognizer:.

Interacting with Other Gesture Recognizers

More than one gesture recognizer may be attached to a view. In the default behavior, touch events in a multitouch sequence go from one gesture recognizer to another in a nondeterministic order until the events are finally delivered to the view (if at all). Often this default behavior is what you want. But sometimes you might want one or more of the following behaviors:

Have one gesture recognizer fail before another can start analyzing touch events.

Prevent other gesture recognizers from analyzing a specific multitouch sequence or a touch object in that sequence.

Permit two gesture recognizers to operate simultaneously.

The UIGestureRecognizer class provides client methods, delegate methods, and methods overridden by subclasses to enable you to effect these behaviors.

Requiring a Gesture Recognizer to Fail

You might want a relationship between two gesture recognizers so that one can operate only if the other one fails. For example, recognizer A doesn’t begin analyzing a multitouch sequence until recognizer B fails and, conversely, if recognizer B does recognize its gesture, recognizer A never looks at the multitouch sequence. An example where you might specify this relationship is when you have a gesture recognizer for a single tap and another gesture recognizer for a double tap; the single-tap recognizer requires the double-tap recognizer to fail before it begins operating on a multitouch sequence.

The method you call to specify this relationship is requireGestureRecognizerToFail:. After sending the message, the receiving gesture recognizer must stay in the UIGestureRecognizerStatePossible state until the specified gesture recognizer transitions to UIGestureRecognizerStateFailed. If the specified gesture recognizer transitions to UIGestureRecognizerStateRecognized or UIGestureRecognizerStateBegan instead, then the receiving recognizer can proceed, but no action message is sent if it recognizes its gesture.

Note: In the case of the single-tap versus double-tap gestures, if a single-tap gesture recognizer doesn’t require the double-tap recognizer to fail, you should expect to receive your single-tap actions before your double-tap actions, even in the case of a double tap. This is expected and desirable behavior because the best user experience generally involves stackable actions. If you want double-tap and single-tap gesture recognizers to have mutually exclusive actions, you can make the single-tap recognizer require the double-tap recognizer to fail. You won't get any single-tap actions on a double tap, but any single-tap actions you do receive will necessarily lag behind the user's touch input. In other words, there is no way to know whether the user double-tapped until after the double-tap delay, so the single-tap gesture recognizer cannot send its action until that delay has passed.
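The waiting rule for requireGestureRecognizerToFail: can be summarized as a decision on the required recognizer's state. This plain-C sketch is a hypothetical model (RecognizerState, Dependency, and dependency_for are invented names); it assumes that once the required recognizer has reached Began or any later state other than Failed, the dependent recognizer's action stays blocked for that multitouch sequence.

```c
/* Hypothetical mirror of the gesture-recognizer states. */
typedef enum {
    StatePossible, StateBegan, StateChanged, StateEnded,
    StateCancelled, StateFailed
} RecognizerState;

typedef enum {
    DependentWaits,         /* stay in Possible and hold back the action */
    DependentMayProceed,    /* the required recognizer failed */
    DependentActionBlocked  /* the required recognizer recognized its gesture */
} Dependency;

/* What a recognizer that was sent requireGestureRecognizerToFail: may do,
   given the current state of the recognizer it depends on. */
Dependency dependency_for(RecognizerState required) {
    switch (required) {
        case StatePossible: return DependentWaits;
        case StateFailed:   return DependentMayProceed;
        default:            return DependentActionBlocked; /* Began or later */
    }
}
```

In the single-tap versus double-tap case, the single-tap recognizer is the dependent one: it gets DependentWaits for as long as the double-tap recognizer is still Possible, which is why its action lags behind the user's touch input.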

For a discussion of gesture-recognition states and the possible transitions between these states, see “State Transitions.”

Preventing Gesture Recognizers from Analyzing Touches

You can prevent gesture recognizers from looking at specific touches or even from recognizing a gesture. You can specify these “prevention” relationships either by implementing delegate methods or by overriding methods declared by the UIGestureRecognizer class.

The UIGestureRecognizerDelegate protocol declares two optional methods that prevent specific gesture recognizers from recognizing gestures on a case-by-case basis:

gestureRecognizerShouldBegin: is called when a gesture recognizer attempts to transition out of UIGestureRecognizerStatePossible. Return NO to make it transition to UIGestureRecognizerStateFailed instead. (The default value is YES.)

gestureRecognizer:shouldReceiveTouch: is called before the window object calls touchesBegan:withEvent: on the gesture recognizer when there are one or more new touches. Return NO to prevent the gesture recognizer from seeing the objects representing these touches. (The default value is YES.)

In addition, there are two UIGestureRecognizer methods (declared in UIGestureRecognizerSubclass.h) that effect the same behavior as these delegation methods. A subclass can override these methods to define class-wide prevention rules:

```objc
- (BOOL)canPreventGestureRecognizer:(UIGestureRecognizer *)preventedGestureRecognizer;
- (BOOL)canBePreventedByGestureRecognizer:(UIGestureRecognizer *)preventingGestureRecognizer;
```

Permitting Simultaneous Gesture Recognition

By default, no two gesture recognizers can attempt to recognize their gestures simultaneously. But you can change this behavior by implementing gestureRecognizer:shouldRecognizeSimultaneouslyWithGestureRecognizer:, an optional method of the UIGestureRecognizerDelegate protocol. This method is called when the recognition of the receiving gesture recognizer would block the operation of the specified gesture recognizer, or vice versa. Return YES to allow both gesture recognizers to recognize their gestures simultaneously.

Note: Returning YES is guaranteed to allow simultaneous recognition, but returning NO is not guaranteed to prevent simultaneous recognition because the other gesture's delegate may return YES.
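The asymmetry described in the note amounts to a logical OR of the two delegates' answers. This plain-C sketch is a hypothetical model (may_recognize_simultaneously is an invented name), assuming that an unimplemented delegate method counts as NO, which matches the default of no simultaneous recognition.

```c
#include <stdbool.h>

/* Each recognizer's delegate may answer
   gestureRecognizer:shouldRecognizeSimultaneouslyWithGestureRecognizer:.
   A missing delegate method is modeled as false. */
bool may_recognize_simultaneously(bool delegateOfA_says, bool delegateOfB_says) {
    /* One YES from either side is enough; one NO cannot veto the other YES. */
    return delegateOfA_says || delegateOfB_says;
}
```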

Regulating the Delivery of Touches to Views

Generally, a window delivers UITouch objects (packaged in UIEvent objects) to a gesture recognizer before it delivers them to the attached hit-test view. But there are some subtle detours and dead-ends in this general delivery path that depend on whether a gesture is recognized. You can alter this delivery path to suit the requirements of your application.

Default Touch-Event Delivery

By default, a window delays the delivery of touch objects in the Ended phase to the hit-test view and, if the gesture is recognized, both prevents the delivery of current touch objects to the view and cancels touch objects previously received by the view. The exact behavior depends on the phase of the touch objects and on whether a gesture recognizer recognizes its gesture or fails to recognize it in a multitouch sequence.

To clarify this behavior, consider a hypothetical gesture recognizer for a discrete gesture involving two touches (that is, two fingers). Touch objects enter the system and are passed from the UIApplication object to the UIWindow object for the hit-test view. The following sequence occurs when the gesture is recognized:

1. The window sends two touch objects in the Began phase (UITouchPhaseBegan) to the gesture recognizer, which doesn’t recognize the gesture. The window sends these same touches to the view attached to the gesture recognizer.

2. The window sends two touch objects in the Moved phase (UITouchPhaseMoved) to the gesture recognizer, and the recognizer still doesn’t detect its gesture. The window then sends these touches to the attached view.

3. The window sends one touch object in the Ended phase (UITouchPhaseEnded) to the gesture recognizer. This touch object doesn’t yield enough information for the gesture, but the window withholds the object from the attached view.

4. The window sends the other touch object in the Ended phase. The gesture recognizer now recognizes its gesture and so it sets its state to UIGestureRecognizerStateRecognized. Just before the first (or only) action message is sent, the view receives a touchesCancelled:withEvent: message to invalidate the touch objects previously sent (in the Began and Moved phases). The touches in the Ended phase are canceled.

Now assume that the gesture recognizer in the last step instead decides that this multitouch sequence it’s been analyzing is not its gesture. It sets its state to UIGestureRecognizerStateFailed. The window then sends the two touch objects in the Ended phase to the attached view in a touchesEnded:withEvent: message.
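The two outcomes of this walkthrough can be written down as the sequence of messages the attached view receives. This is a plain-C model of the default settings only, with hypothetical names (ViewMessage, view_messages); it collapses each phase to a single message and does not reproduce UIKit's delivery machinery.

```c
#include <stdbool.h>
#include <stddef.h>

/* Messages the attached view can receive in this walkthrough. */
typedef enum { MsgBegan, MsgMoved, MsgEnded, MsgCancelled } ViewMessage;

/* Writes the messages the hit-test view receives for the two-finger
   discrete gesture into out[] and returns how many were written. */
size_t view_messages(bool recognized, ViewMessage out[4]) {
    size_t n = 0;
    out[n++] = MsgBegan;  /* Began touches reach the view immediately */
    out[n++] = MsgMoved;  /* so do Moved touches */
    /* Ended touches are withheld until the recognizer decides:
       recognition cancels the earlier touches, failure releases
       the withheld Ended touches to the view. */
    out[n++] = recognized ? MsgCancelled : MsgEnded;
    return n;
}
```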

A gesture recognizer for a continuous gesture goes through a similar sequence, except that it is more likely to recognize its gesture before touch objects reach the Ended phase. Upon recognizing its gesture, it sets its state to UIGestureRecognizerStateBegan. The window sends all subsequent touch objects in the multitouch sequence to the gesture recognizer but not to the attached view.

Note: For a discussion of gesture-recognition states and the possible transitions between these states, see “State Transitions.”

Affecting the Delivery of Touches to Views

You can change the values of three UIGestureRecognizer properties to alter the default delivery path of touch objects to views in certain ways. These properties and their default values are:

cancelsTouchesInView (default of YES)

delaysTouchesBegan (default of NO)

delaysTouchesEnded (default of YES)

If you change the default values of these properties, you get the following differences in behavior:

Setting cancelsTouchesInView to NO causes touchesCancelled:withEvent: to not be sent to the view for any touches belonging to the recognized gesture. As a result, any touch objects in the Began or Moved phases previously received by the attached view are not invalidated.

Setting delaysTouchesBegan to YES ensures that when a gesture recognizer recognizes a gesture, no touch objects that were part of that gesture are delivered to the attached view. This setting provides a behavior similar to that offered by the delaysContentTouches property on UIScrollView; in that case, when scrolling begins soon after the touch begins, subviews of the scroll-view object never receive the touch, so there is no flash of visual feedback. Be careful with this setting because it can easily make your interface feel unresponsive.

Setting delaysTouchesEnded to NO prevents a gesture recognizer that has recognized its gesture after a touch has ended from canceling that touch on the view. For example, say a view has a UITapGestureRecognizer object attached with its numberOfTapsRequired set to 2, and the user double-taps the view. If this property is set to NO, the view gets the following sequence of messages: touchesBegan:withEvent:, touchesEnded:withEvent:, touchesBegan:withEvent:, and touchesCancelled:withEvent:. With the property set to YES, the view gets touchesBegan:withEvent:, touchesBegan:withEvent:, touchesCancelled:withEvent:, and touchesCancelled:withEvent:. The purpose of this property is to ensure that a view won't complete an action as a result of a touch that the gesture will want to cancel later.

Creating Custom Gesture Recognizers

If you are going to create a custom gesture recognizer, you need to have a clear understanding of how gesture recognizers work. The following section gives you the architectural background of gesture recognition, and the subsequent section goes into details of actually creating a gesture recognizer.

State Transitions

Gesture recognizers operate in a predefined state machine. They transition from one state to another depending on whether certain conditions apply. The following enum constants from UIGestureRecognizer.h define the states for gesture recognizers:

```objc
typedef enum {
    UIGestureRecognizerStatePossible,
    UIGestureRecognizerStateBegan,
    UIGestureRecognizerStateChanged,
    UIGestureRecognizerStateEnded,
    UIGestureRecognizerStateCancelled,
    UIGestureRecognizerStateFailed,
    UIGestureRecognizerStateRecognized = UIGestureRecognizerStateEnded
} UIGestureRecognizerState;
```

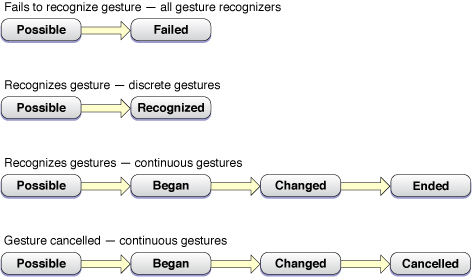

The sequence of states that a gesture recognizer may transition through varies, depending on whether a discrete or continuous gesture is being recognized. All gesture recognizers start in the Possible state (UIGestureRecognizerStatePossible). They then analyze the multitouch sequence targeted at their attached hit-test view, and they either recognize their gesture or fail to recognize it. If a gesture recognizer does not recognize its gesture, it transitions to the Failed state (UIGestureRecognizerStateFailed); this is true of all gesture recognizers, regardless of whether the gesture is discrete or continuous.

When a gesture is recognized, however, the state transitions differ for discrete and continuous gestures. A recognizer for a discrete gesture transitions from Possible to Recognized (UIGestureRecognizerStateRecognized). A recognizer for a continuous gesture, on the other hand, transitions from Possible to Began (UIGestureRecognizerStateBegan) when it first recognizes the gesture. Then it transitions from Began to Changed (UIGestureRecognizerStateChanged), and subsequently from Changed to Changed every time there is a change in the gesture. Finally, when the last finger in the multitouch sequence is lifted from the hit-test view, the gesture recognizer transitions to the Ended state (UIGestureRecognizerStateEnded), which is an alias for the UIGestureRecognizerStateRecognized state. A recognizer for a continuous gesture can also transition from the Changed state to a Cancelled state (UIGestureRecognizerStateCancelled) if it determines that the recognized gesture no longer fits the expected pattern for its gesture. Figure 3-3 illustrates these transitions.

Note: The Began, Changed, Ended, and Cancelled states are not necessarily associated with UITouch objects in corresponding touch phases. They strictly denote the phase of the gesture itself, not the touch objects that are being recognized.

When a gesture is recognized, every subsequent state transition causes an action message to be sent to the target. When a gesture recognizer reaches the Recognized or Ended state, it is asked to reset its internal state in preparation for a new attempt at recognizing the gesture. The UIGestureRecognizer class then sets the gesture recognizer’s state back to Possible.
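The transitions described above can be encoded as a validity check, which is handy when testing a custom recognizer's logic. This plain-C sketch uses hypothetical names (GestureState, is_valid_transition); as an assumption beyond this section's text, it also lets a continuous recognizer go from Began directly to Ended or Cancelled, treating the Changed state as optional.

```c
#include <stdbool.h>

/* Hypothetical mirror of UIGestureRecognizerState. */
typedef enum {
    StatePossible, StateBegan, StateChanged, StateEnded,
    StateCancelled, StateFailed
} GestureState;

/* As in UIGestureRecognizer.h, Recognized is an alias for Ended. */
#define StateRecognized StateEnded

/* Returns true if from -> to is a transition described in this section. */
bool is_valid_transition(GestureState from, GestureState to, bool continuous) {
    /* Any recognizer may fail while still in Possible. */
    if (from == StatePossible && to == StateFailed) return true;
    if (!continuous) {
        /* Discrete: Possible -> Recognized is the only success path. */
        return from == StatePossible && to == StateRecognized;
    }
    switch (from) {
        case StatePossible:
            return to == StateBegan;
        case StateBegan:    /* assumption: Changed may be skipped */
        case StateChanged:
            return to == StateChanged || to == StateEnded || to == StateCancelled;
        default:
            return false;   /* Ended, Cancelled, and Failed are terminal here */
    }
}
```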

Implementing a Custom Gesture Recognizer

To implement a custom gesture recognizer, first create a subclass of UIGestureRecognizer in Xcode. Then, add the following import directive in your subclass’s header file:

```objc
#import <UIKit/UIGestureRecognizerSubclass.h>
```

Next copy the following method declarations from UIGestureRecognizerSubclass.h to your header file; these are the methods you override in your subclass:

```objc
- (void)reset;
- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event;
```

Be sure to call the superclass implementation (super) in every method you override.

Examine the declaration of the state property in UIGestureRecognizerSubclass.h. Notice that it is now given a readwrite option instead of readonly (in UIGestureRecognizer.h). Your subclass can now change its state by assigning UIGestureRecognizerState constants to the property.

The UIGestureRecognizer class sends action messages for you and controls the delivery of touch objects to the hit-test view. You do not need to implement these tasks yourself.

Implementing the Multitouch Event-Handling Methods

The heart of the implementation for a gesture recognizer is the four methods touchesBegan:withEvent:, touchesMoved:withEvent:, touchesEnded:withEvent:, and touchesCancelled:withEvent:. You implement these methods much as you would implement them for a custom view.

Note: See “Handling Multi-Touch Events” in iOS Application Programming Guide for information about handling events delivered during a multitouch sequence.

The main difference in the implementation of these methods for a gesture recognizer is that you transition between states at the appropriate moment. To do this, you must set the value of the state property to the appropriate UIGestureRecognizerState constant. When a gesture recognizer recognizes a discrete gesture, it sets the state property to UIGestureRecognizerStateRecognized. If the gesture is continuous, it sets the state property first to UIGestureRecognizerStateBegan; then, for each change in position of the gesture, it sets (or resets) the property to UIGestureRecognizerStateChanged. When the gesture ends, it sets state to UIGestureRecognizerStateEnded. If at any point a gesture recognizer realizes that this multitouch sequence is not its gesture, it sets its state to UIGestureRecognizerStateFailed.

Listing 3-3 is an implementation of a gesture recognizer for a discrete single-touch “checkmark” gesture (actually any V-shaped gesture). It records the midpoint of the gesture—the point at which the upstroke begins—so that clients can obtain this value.

Listing 3-3 Implementation of a “checkmark” gesture recognizer.

```objc
// Assumes the header imports <UIKit/UIGestureRecognizerSubclass.h> and the
// class declares a BOOL instance variable strokeUp and a CGPoint property midPoint.

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    [super touchesBegan:touches withEvent:event];
    // A checkmark is drawn with exactly one finger.
    if ([touches count] != 1) {
        self.state = UIGestureRecognizerStateFailed;
        return;
    }
}

- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    [super touchesMoved:touches withEvent:event];
    if (self.state == UIGestureRecognizerStateFailed) return;
    CGPoint nowPoint = [[touches anyObject] locationInView:self.view];
    CGPoint prevPoint = [[touches anyObject] previousLocationInView:self.view];
    if (!strokeUp) {
        // On the downstroke, both x and y increase (y grows downward in UIKit).
        if (nowPoint.x >= prevPoint.x && nowPoint.y >= prevPoint.y) {
            self.midPoint = nowPoint;
        // The upstroke has an increasing x value but a decreasing y value.
        } else if (nowPoint.x >= prevPoint.x && nowPoint.y <= prevPoint.y) {
            strokeUp = YES;
        } else {
            // Any leftward movement means this is not a checkmark.
            self.state = UIGestureRecognizerStateFailed;
        }
    }
}

- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    [super touchesEnded:touches withEvent:event];
    // Recognize only if the stroke went down and then came back up.
    if ((self.state == UIGestureRecognizerStatePossible) && strokeUp) {
        self.state = UIGestureRecognizerStateRecognized;
    }
}

- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    [super touchesCancelled:touches withEvent:event];
    self.midPoint = CGPointZero;
    strokeUp = NO;
    self.state = UIGestureRecognizerStateFailed;
}
```
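Because the direction test in Listing 3-3 is plain arithmetic on two points, it can be exercised without UIKit. This plain-C sketch is a hypothetical model (Pt, CheckmarkModel, checkmark_moved, checkmark_ended, and checkmark_reset are invented names) that mirrors the touchesMoved:withEvent: and touchesEnded:withEvent: logic and the reset step; it leaves out the single-touch check in touchesBegan:withEvent:.

```c
#include <stdbool.h>

/* Hypothetical stand-in for CGPoint. As in UIKit, y grows downward,
   so a downstroke has increasing y. */
typedef struct { double x, y; } Pt;

typedef enum { CheckPossible, CheckRecognized, CheckFailed } CheckState;

typedef struct {
    CheckState state;
    bool strokeUp;
    Pt midPoint;
} CheckmarkModel;

/* Same job as the reset method: prepare for a new attempt. */
void checkmark_reset(CheckmarkModel *m) {
    m->state = CheckPossible;
    m->strokeUp = false;
    m->midPoint.x = 0;
    m->midPoint.y = 0;
}

/* Mirrors the direction test in touchesMoved:withEvent:. */
void checkmark_moved(CheckmarkModel *m, Pt prev, Pt now) {
    if (m->state == CheckFailed || m->strokeUp) return;
    if (now.x >= prev.x && now.y >= prev.y) {
        m->midPoint = now;        /* still on the downstroke */
    } else if (now.x >= prev.x && now.y <= prev.y) {
        m->strokeUp = true;       /* the upstroke begins */
    } else {
        m->state = CheckFailed;   /* moved leftward */
    }
}

/* Mirrors touchesEnded:withEvent:. */
void checkmark_ended(CheckmarkModel *m) {
    if (m->state == CheckPossible && m->strokeUp) {
        m->state = CheckRecognized;
    }
}
```

For the path (0,0), (10,10), (20,20), (30,10), (40,0), the model recognizes the gesture with a midpoint of (20,20), the point where the upstroke begins.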

If a gesture recognizer detects a touch (as represented by a UITouch object) that it determines is not part of its gesture, it can pass it on directly to its view. To do this, it calls ignoreTouch:forEvent: on itself, passing in the touch object. Ignored touches are not withheld from the attached view even if the value of the cancelsTouchesInView property is YES.

Resetting State

When your gesture recognizer transitions to either the UIGestureRecognizerStateRecognized state or the UIGestureRecognizerStateEnded state, the UIGestureRecognizer class calls the reset method of the gesture recognizer just before it winds back the gesture recognizer’s state to UIGestureRecognizerStatePossible. A gesture recognizer class should implement this method to reset any internal state so that it is ready for a new attempt at recognizing the gesture. After a gesture recognizer returns from this method, it receives no further updates for touches that have already begun but haven’t ended.

Listing 3-4 Resetting a gesture recognizer

```objc
- (void)reset {
    [super reset];
    // Clear per-attempt state so the next checkmark starts fresh.
    self.midPoint = CGPointZero;
    strokeUp = NO;
}
```

Last updated: 2010-08-03