Multitouch Events

Note: This chapter contains information that used to be in iPhone Application Programming Guide. The information in this chapter has not been updated specifically for iOS 4.0.

Touch events in iOS are based on a Multi-Touch model. Instead of using a mouse and a keyboard, users touch the screen of the device to manipulate objects, enter data, and otherwise convey their intentions. iOS recognizes one or more fingers touching the screen as part of a multitouch sequence. This sequence begins when the first finger touches down on the screen and ends when the last finger is lifted from the screen. iOS tracks fingers touching the screen throughout a multitouch sequence and records the characteristics of each of them, including the location of the finger on the screen and the time the touch occurred. Applications often recognize certain combinations of touches as gestures and respond to them in ways that are intuitive to users, such as zooming in on content in response to a pinching gesture and scrolling through content in response to a flicking gesture.

Notes: A finger on the screen affords a much different level of precision than a mouse pointer. When a user touches the screen, the area of contact is actually elliptical and tends to be offset below the point where the user thinks he or she touched. This “contact patch” also varies in size and shape based on which finger is touching the screen, the size of the finger, the pressure of the finger on the screen, the orientation of the finger, and other factors. The underlying Multi-Touch system analyzes all of this information for you and computes a single touch point.

iOS 4.0 still reports touches on iPhone 4 (and on future high-resolution devices) in a 320x480 coordinate space to maintain source compatibility, but the resolution is twice as high in each dimension for applications built for iOS 4.0 and later releases. In concrete terms, that means that touches for applications built for iOS 4 running on iPhone 4 can land on half-point boundaries where on older devices they land only on full point boundaries. If you have any round-to-integer code in your touch-handling path you may lose this precision.
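To make the precision point concrete, here is a minimal sketch in plain C, which also compiles inside an Objective-C source file. Both function names are hypothetical and are not part of UIKit. The sketch only illustrates that truncating a coordinate to a whole point discards the half point that a high-resolution device can report, whereas snapping to the nearest half point keeps it.

```c
/* Hypothetical helper, not a UIKit function: snaps a touch coordinate
   to the nearest half point instead of the nearest whole point. */
double snap_to_half_point(double coordinate)
{
    if (coordinate < 0.0) {
        /* Mirror negative values so the cast below rounds symmetrically. */
        return -snap_to_half_point(-coordinate);
    }
    /* Work in half-point units, round to the nearest unit, convert back. */
    long halfPoints = (long)(coordinate * 2.0 + 0.5);
    return (double)halfPoints / 2.0;
}

/* The lossy pattern the text warns about: truncation to a whole point. */
int truncate_to_whole_point(double coordinate)
{
    return (int)coordinate;
}
```

With these helpers, a touch reported at x = 100.5 stays at 100.5 after snapping but becomes 100 after truncation.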

Many classes in UIKit handle multitouch events in ways that are distinctive to objects of the class. This is especially true of subclasses of UIControl, such as UIButton and UISlider. Objects of these subclasses—known as control objects—are receptive to certain types of gestures, such as a tap or a drag in a certain direction; when properly configured, they send an action message to a target object when that gesture occurs. Other UIKit classes handle gestures in other contexts; for example, UIScrollView provides scrolling behavior for table views, text views, and other views with large content areas.

Some applications may not need to handle events directly; instead, they can rely on the classes of UIKit for that behavior. However, if you create a custom subclass of UIView—a common pattern in iOS development—and if you want that view to respond to certain touch events, you need to implement the code required to handle those events. Moreover, if you want a UIKit object to respond to events differently, you have to create a subclass of that framework class and override the appropriate event-handling methods.

Events and Touches

In iOS, a touch is the presence or movement of a finger on the screen that is part of a unique multitouch sequence. For example, a pinch-close gesture has two touches: two fingers on the screen moving toward each other from opposite directions. There are simple single-finger gestures, such as a tap, or a double-tap, a drag, or a flick (where the user quickly swipes a finger across the screen). An application might recognize even more complicated gestures; for example, an application might have a custom control in the shape of a dial that users “turn” with multiple fingers to fine-tune some variable.

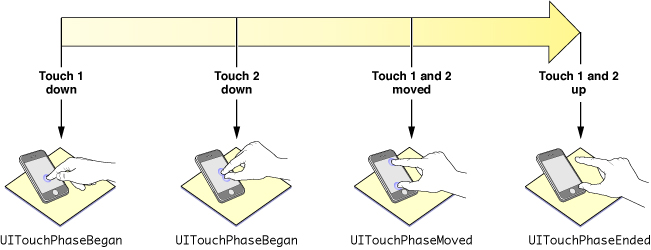

A UIEvent object of type UIEventTypeTouches represents a touch event. The system continually sends these touch-event objects (or simply, touch events) to an application as fingers touch the screen and move across its surface. The event provides a snapshot of all touches during a multitouch sequence, most importantly the touches that are new or have changed for a particular view. As depicted in Figure 2-1, a multitouch sequence begins when a finger first touches the screen. Other fingers may subsequently touch the screen, and all fingers may move across the screen. The sequence ends when the last of these fingers is lifted from the screen. An application receives event objects during each phase of any touch.

Touches, which are represented by UITouch objects, have both temporal and spatial aspects. The temporal aspect, called a phase, indicates when a touch has just begun, whether it is moving or stationary, and when it ends—that is, when the finger is lifted from the screen.

The spatial aspect of touches concerns their association with the object in which they occur as well as their location in it. When a finger touches the screen, the touch is associated with the underlying window and view and maintains that association throughout the life of the event. If multiple touches arrive at once, they are treated together only if they are associated with the same view. Likewise, if two touches arrive in quick succession, they are treated as a multiple tap only if they are associated with the same view. A touch object stores the current location and previous location (if any) of the touch in its view or window.

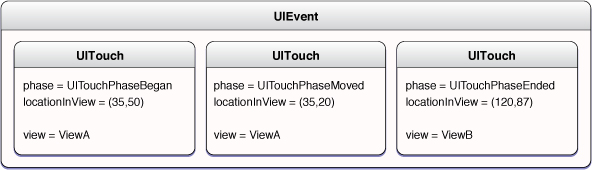

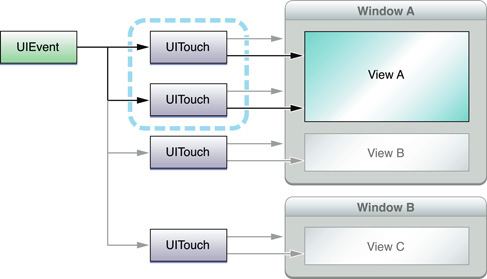

An event object contains all touch objects for the current multitouch sequence and can provide touch objects specific to a view or window (see Figure 2-2). A touch object is persistent for a given finger during a sequence, and UIKit mutates it as it tracks the finger throughout the sequence. The touch attributes that change are the phase of the touch, its location in a view, its previous location, and its timestamp. Event-handling code may evaluate these attributes to determine how to respond to a touch event.

Because the system can cancel a multitouch sequence at any time, an event-handling application must be prepared to respond appropriately. Cancellations can occur as a result of overriding system events, such as an incoming phone call.

Approaches for Handling Touch Events

Most applications that are interested in users’ touches on their custom views are interested in detecting and handling well-established gestures. These gestures include tapping (one or multiple times), pinching (to zoom a view in or out), swiping, panning or dragging a view, and using two fingers to rotate a view.

You could implement the touch-event handling code to recognize and handle these gestures, but that code would be complex, possibly buggy, and take some time to write. Alternatively, you could simplify the interpretation and handling of common gestures by using one of the gesture recognizer classes introduced in iOS 3.2. To use a gesture recognizer, you instantiate it, attach it to the view receiving touches, configure it, and assign it an action selector and a target object. When the gesture recognizer recognizes its gesture, it sends an action message to the target, allowing the target to respond to the gesture.

You can implement a custom gesture recognizer by subclassing UIGestureRecognizer. A custom gesture recognizer requires you to analyze the stream of events in a multitouch sequence to recognize your distinct gesture; to do this, you should be familiar with the information in this chapter.

For information about gesture recognizers, see “Gesture Recognizers.”

Regulating Touch Event Delivery

UIKit gives applications programmatic means to simplify event handling or to turn off the stream of UIEvent objects completely. The following list summarizes these approaches:

Turning off delivery of touch events. By default, a view receives touch events, but you can set its userInteractionEnabled property to NO to turn off delivery of touch events. A view also does not receive these events if it’s hidden or if it’s transparent.

Turning off delivery of touch events for a period. An application can call the UIApplication method beginIgnoringInteractionEvents and later call the endIgnoringInteractionEvents method. The first method stops the application from receiving touch events entirely; the second method is called to resume the receipt of such events. You sometimes want to turn off event delivery while your code is performing animations.

Turning on delivery of multiple touches. By default, a view ignores all but the first touch during a multitouch sequence. If you want the view to handle multiple touches you must enable this capability for the view. You do this programmatically by setting the multipleTouchEnabled property of your view to YES, or in Interface Builder by setting the related attribute in the inspector for the related view.

Restricting event delivery to a single view. By default, a view’s exclusiveTouch property is set to NO, which means that this view does not block other views in a window from receiving touches. If you set the property to YES, you mark the view so that, if it is tracking touches, it is the only view in the window that is tracking touches. Other views in the window cannot receive those touches. However, a view that is marked “exclusive touch” does not receive touches that are associated with other views in the same window. If a finger contacts an exclusive-touch view, then that touch is delivered only if that view is the only view tracking a finger in that window. If a finger touches a non-exclusive view, then that touch is delivered only if there is not another finger tracking in an exclusive-touch view.

Restricting event delivery to subviews. A custom UIView class can override hitTest:withEvent: to restrict the delivery of multitouch events to its subviews. See “Hit-Testing” for a discussion of this technique.
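The two exclusive-touch rules in the list above can be restated as a small decision function. This is only an illustrative model in plain C: UIKit makes this decision internally and exposes no such function, so the name touch_is_delivered and its parameters are assumptions made for the sketch.

```c
#include <stdbool.h>

/* Hypothetical model of the exclusive-touch rules; not a UIKit API.
   otherViewsTracking: how many other views in the window are currently
   tracking a finger. otherExclusiveViewsTracking: how many of those
   other views have exclusiveTouch set to YES. */
bool touch_is_delivered(bool targetIsExclusive,
                        int otherViewsTracking,
                        int otherExclusiveViewsTracking)
{
    if (targetIsExclusive) {
        /* An exclusive-touch view receives the touch only if it is the
           only view tracking a finger in the window. */
        return otherViewsTracking == 0;
    }
    /* A non-exclusive view receives the touch only if no exclusive-touch
       view is tracking a finger. */
    return otherExclusiveViewsTracking == 0;
}
```

For example, a touch on an ordinary view is dropped while a finger is down in an exclusive-touch view, but two ordinary views can track fingers at the same time.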

Handling Multitouch Events

To handle multitouch events, you must first create a subclass of a responder class. This subclass could be any one of the following:

A custom view (subclass of UIView)

A subclass of UIViewController or one of its UIKit subclasses

A subclass of a UIKit view or control class, such as UIImageView or UISlider

A subclass of UIApplication or UIWindow (although this would be rare)

A view controller typically receives, via the responder chain, touch events initially sent to its view.

For instances of your subclass to receive multitouch events, your subclass must implement one or more of the UIResponder methods for touch-event handling, described below. In addition, the view must be visible (neither transparent nor hidden) and must have its userInteractionEnabled property set to YES, which is the default.

The following sections describe the touch-event handling methods, describe approaches for handling common gestures, show an example of a responder object that handles a complex sequence of multitouch events, discuss event forwarding, and suggest some techniques for event handling.

The Event-Handling Methods

During a multitouch sequence, the application dispatches a series of event messages to the target responder. To receive and handle these messages, the class of a responder object must implement at least one of the following methods declared by UIResponder, and, in some cases, all of these methods:

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event;
    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event;
    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event;
    - (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event;

The application sends these messages when there are new or changed touches for a given touch phase:

It sends the touchesBegan:withEvent: message when one or more fingers touch down on the screen.

It sends the touchesMoved:withEvent: message when one or more fingers move.

It sends the touchesEnded:withEvent: message when one or more fingers lift up from the screen.

It sends the touchesCancelled:withEvent: message when the touch sequence is cancelled by a system event, such as an incoming phone call.

Each of these methods is associated with a touch phase; for example, touchesBegan:withEvent: is associated with UITouchPhaseBegan. You can get the phase of any UITouch object by evaluating its phase property.

Each message that invokes an event-handling method passes in two parameters. The first is a set of UITouch objects that represent new or changed touches for the given phase. The second parameter is a UIEvent object representing this particular event. From the event object you can get all touch objects for the event or a subset of those touch objects filtered for specific views or windows. Some of these touch objects represent touches that have not changed since the previous event message or that have changed but are in different phases.

Basics of Touch-Event Handling

You frequently handle an event for a given phase by getting one or more of the UITouch objects in the passed-in set, evaluating their properties or getting their locations, and proceeding accordingly. The objects in the set represent those touches that are new or have changed for the phase represented by the implemented event-handling method. If any of the touch objects will do, you can send the NSSet object an anyObject message; this is the case when the view receives only the first touch in a multitouch sequence (that is, the multipleTouchEnabled property is set to NO).

An important UITouch method is locationInView:, which, if passed a parameter of self, yields the location of the touch in the coordinate system of the receiving view. A parallel method tells you the previous location of the touch (previousLocationInView:). Properties of the UITouch instance tell you how many taps have been made (tapCount), when the touch was created or last mutated (timestamp), and what phase it is in (phase).

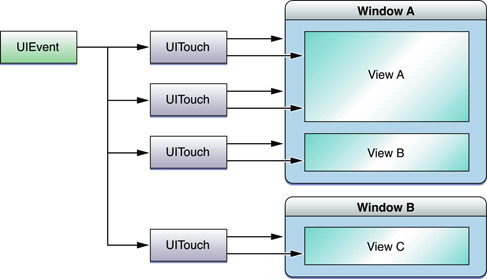

If for some reason you are interested in touches in the current multitouch sequence that have not changed since the last phase or that are in a phase other than the ones in the passed-in set, you can request them from the passed-in UIEvent object. The diagram in Figure 2-3 depicts a UIEvent object that contains four touch objects. To get all these touch objects, you would invoke the allTouches method on the event object.

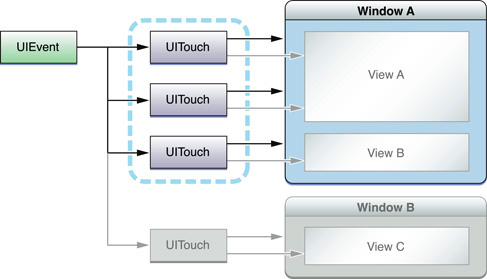

If on the other hand you are interested in only those touches associated with a specific window (Window A in Figure 2-4), you would send the UIEvent object a touchesForWindow: message.

If you want to get the touches associated with a specific view, you would call touchesForView: on the event object, passing in the view object (View A in Figure 2-5).

If a responder creates persistent objects while handling events during a multitouch sequence, it should implement touchesCancelled:withEvent: to dispose of those objects when the system cancels the sequence. Cancellation often occurs when an external event—for example, an incoming phone call—disrupts the current application’s event processing. Note that a responder object should also dispose of those same objects when it receives the last touchesEnded:withEvent: message for a multitouch sequence. (See “Forwarding Touch Events” to find out how to determine the last UITouchPhaseEnded touch object in a multitouch sequence.)

Important: If your custom responder class is a subclass of UIView or UIViewController, you should implement all of the methods described in “The Event-Handling Methods.” If your class is a subclass of any other UIKit responder class, you do not need to override all of the event-handling methods; however, in those methods that you do override, be sure to call the superclass implementation of the method (for example, [super touchesBegan:touches withEvent:theEvent];). The reason for this guideline is simple: All views that process touches, including your own, expect (or should expect) to receive a full touch-event stream. If you prevent a UIKit responder object from receiving touches for a certain phase of an event, the resulting behavior may be undefined and probably undesirable.

Handling Tap Gestures

A very common gesture in iOS applications is the tap: the user taps an object on the screen with his or her finger. A responder object can handle a single tap in one way, a double-tap in another, and possibly a triple-tap in yet another way. To determine the number of times the user tapped a responder object, you get the value of the tapCount property of a UITouch object.

The best places to find this value are the methods touchesBegan:withEvent: and touchesEnded:withEvent:. In many cases, the latter method is preferred because it corresponds to the touch phase in which the user lifts a finger from a tap. By looking for the tap count in the touch-up phase (UITouchPhaseEnded), you ensure that the finger is really tapping and not, for instance, touching down and then dragging.

Listing 2-1 shows how to determine whether a double-tap occurred in one of your views.

Listing 2-1 Detecting a double-tap gesture

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    }

    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    }

    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
        for (UITouch *touch in touches) {
            if (touch.tapCount >= 2) {
                [self.superview bringSubviewToFront:self];
            }
        }
    }

    - (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    }

A complication arises when a responder object wants to handle a single-tap and a double-tap gesture in different ways. For example, a single tap might select the object and a double tap might display a view for editing the item that was double-tapped. How is the responder object to know that a single tap is not the first part of a double tap? Listing 2-2 illustrates an implementation of the event-handling methods that increases the size of the receiving view upon a double-tap gesture and decreases it upon a single-tap gesture.

The following is a commentary on this code:

In touchesEnded:withEvent:, when the tap count is one, the responder object sends itself a performSelector:withObject:afterDelay: message. The selector identifies another method implemented by the responder to handle the single-tap gesture; the second parameter is an NSValue or NSDictionary object that holds some state of the UITouch object; the delay is some reasonable interval between a single- and a double-tap gesture.

Note: Because a touch object is mutated as it proceeds through a multitouch sequence, you cannot retain a touch and assume that its state remains the same. (And you cannot copy a touch object because UITouch does not adopt the NSCopying protocol.) Thus if you want to preserve the state of a touch object, you should store those bits of state in an NSValue object, a dictionary, or a similar object. (The code in Listing 2-2 stores the location of the touch in a dictionary but does not use it; this code is included for purposes of illustration.)

In touchesBegan:withEvent:, if the tap count is two, the responder object cancels the pending delayed-perform invocation by calling the cancelPreviousPerformRequestsWithTarget: method of NSObject, passing itself as the argument. If the tap count is not two, the method identified by the selector in the previous step for single-tap gestures is invoked after the delay.

In touchesEnded:withEvent:, if the tap count is two, the responder performs the actions necessary for handling double-tap gestures.

Listing 2-2 Handling a single-tap gesture and a double-tap gesture

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *aTouch = [touches anyObject];
        if (aTouch.tapCount == 2) {
            // A second tap arrived: cancel the pending single-tap action
            [NSObject cancelPreviousPerformRequestsWithTarget:self];
        }
    }

    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    }

    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *theTouch = [touches anyObject];
        if (theTouch.tapCount == 1) {
            // Save the touch location; the touch object itself will be mutated
            NSDictionary *touchLoc = [NSDictionary dictionaryWithObject:
                [NSValue valueWithCGPoint:[theTouch locationInView:self]] forKey:@"location"];
            [self performSelector:@selector(handleSingleTap:) withObject:touchLoc afterDelay:0.3];
        } else if (theTouch.tapCount == 2) {
            // Double-tap: increase image size by 10%
            CGRect myFrame = self.frame;
            myFrame.size.width += self.frame.size.width * 0.1;
            myFrame.size.height += self.frame.size.height * 0.1;
            // Shift the origin by half of the size change so the view stays centered
            myFrame.origin.x -= (self.frame.size.width * 0.1) / 2.0;
            myFrame.origin.y -= (self.frame.size.height * 0.1) / 2.0;
            [UIView beginAnimations:nil context:NULL];
            [self setFrame:myFrame];
            [UIView commitAnimations];
        }
    }

    - (void)handleSingleTap:(NSDictionary *)touches {
        // Single-tap: decrease image size by 10%
        CGRect myFrame = self.frame;
        myFrame.size.width -= self.frame.size.width * 0.1;
        myFrame.size.height -= self.frame.size.height * 0.1;
        // Shift the origin by half of the size change so the view stays centered
        myFrame.origin.x += (self.frame.size.width * 0.1) / 2.0;
        myFrame.origin.y += (self.frame.size.height * 0.1) / 2.0;
        [UIView beginAnimations:nil context:NULL];
        [self setFrame:myFrame];
        [UIView commitAnimations];
    }

    - (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
        /* no state to clean up, so null implementation */
    }
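The timing logic behind Listing 2-2 can be traced without UIKit. The following is a minimal model in plain C, offered only as a sketch: TapTracker, tap_began, tap_ended, and tap_tick are hypothetical names, and tap_tick stands in for the run loop firing the delayed perform request. The 0.3-second delay is the value used in Listing 2-2.

```c
#include <stdbool.h>

#define SINGLE_TAP_DELAY 0.3   /* seconds; same delay as Listing 2-2 */

/* Hypothetical model state; not a UIKit type. */
typedef struct {
    bool   singleTapPending;   /* a delayed single-tap action is scheduled */
    double fireTime;           /* time at which the delayed action runs */
    int    singleTaps;         /* single-tap actions performed so far */
    int    doubleTaps;         /* double-tap actions performed so far */
} TapTracker;

/* Mirrors touchesBegan:withEvent: a second tap cancels the pending action. */
void tap_began(TapTracker *tracker, int tapCount)
{
    if (tapCount == 2) {
        tracker->singleTapPending = false;
    }
}

/* Mirrors touchesEnded:withEvent: schedule or act, depending on tap count. */
void tap_ended(TapTracker *tracker, int tapCount, double now)
{
    if (tapCount == 1) {
        tracker->singleTapPending = true;
        tracker->fireTime = now + SINGLE_TAP_DELAY;
    } else if (tapCount == 2) {
        tracker->doubleTaps++;
    }
}

/* Stands in for the run loop: performs the delayed action once it is due. */
void tap_tick(TapTracker *tracker, double now)
{
    if (tracker->singleTapPending && now >= tracker->fireTime) {
        tracker->singleTapPending = false;
        tracker->singleTaps++;
    }
}
```

In this model a second tap that begins before the delay expires suppresses the single-tap action, while a lone tap is acted on only after the delay has passed. The delay therefore trades responsiveness to single taps for the ability to tell the two gestures apart.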

Handling Swipe and Drag Gestures

Horizontal and vertical swipes are a simple type of gesture that you can track easily from your own code and use to perform actions. To detect a swipe gesture, you have to track the movement of the user’s finger along the desired axis of motion, but it is up to you to determine what constitutes a swipe. In other words, you need to determine whether the user’s finger moved far enough, if it moved in a straight enough line, and if it went fast enough. You do that by storing the initial touch location and comparing it to the location reported by subsequent touch-moved events.

Listing 2-3 shows some basic tracking methods you could use to detect horizontal swipes in a view. In this example, the view stores the initial location of the touch in a startTouchPosition instance variable. As the user’s finger moves, the code compares the current touch location to the starting location to determine whether it is a swipe. If the touch moves too far vertically, it is not considered to be a swipe and is processed differently. If it continues along its horizontal trajectory, however, the code continues processing the event as if it were a swipe. The processing routines could then trigger an action once the swipe had progressed far enough horizontally to be considered a complete gesture. To detect swipe gestures in the vertical direction, you would use similar code but would swap the x and y components.

Listing 2-3 Tracking a swipe gesture in a view

    #define HORIZ_SWIPE_DRAG_MIN  12
    #define VERT_SWIPE_DRAG_MAX    4

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *touch = [touches anyObject];
        // startTouchPosition is an instance variable
        startTouchPosition = [touch locationInView:self];
    }

    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *touch = [touches anyObject];
        CGPoint currentTouchPosition = [touch locationInView:self];

        // To be a swipe, direction of touch must be horizontal and long enough.
        if (fabsf(startTouchPosition.x - currentTouchPosition.x) >= HORIZ_SWIPE_DRAG_MIN &&
            fabsf(startTouchPosition.y - currentTouchPosition.y) <= VERT_SWIPE_DRAG_MAX)
        {
            // It appears to be a swipe.
            if (startTouchPosition.x < currentTouchPosition.x)
                [self myProcessRightSwipe:touches withEvent:event];
            else
                [self myProcessLeftSwipe:touches withEvent:event];
        }
    }

    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
        startTouchPosition = CGPointZero;
    }

    - (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
        startTouchPosition = CGPointZero;
    }
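Listing 2-3 checks distance and straightness but not speed, the third criterion mentioned earlier. The sketch below, in plain C, shows one way all three tests might be combined. The function classify_swipe, the SwipeDirection type, and the SWIPE_MIN_SPEED threshold are assumptions made for illustration; only the two distance thresholds come from Listing 2-3. In a responder, the times could be taken from the timestamp property of the touch.

```c
typedef enum { SwipeNone, SwipeLeft, SwipeRight } SwipeDirection;

#define HORIZ_SWIPE_DRAG_MIN 12      /* points; from Listing 2-3 */
#define VERT_SWIPE_DRAG_MAX   4      /* points; from Listing 2-3 */
#define SWIPE_MIN_SPEED     100.0    /* points per second; assumed value */

/* Absolute value without pulling in the math library. */
static double magnitude(double value)
{
    return value < 0.0 ? -value : value;
}

/* Hypothetical classifier: positions in points, times in seconds. */
SwipeDirection classify_swipe(double startX, double startY, double startTime,
                              double endX, double endY, double endTime)
{
    double dx = endX - startX;
    double dy = endY - startY;
    double elapsed = endTime - startTime;

    if (magnitude(dx) < HORIZ_SWIPE_DRAG_MIN) {
        return SwipeNone;                       /* did not move far enough */
    }
    if (magnitude(dy) > VERT_SWIPE_DRAG_MAX) {
        return SwipeNone;                       /* not straight enough */
    }
    if (elapsed <= 0.0 || magnitude(dx) / elapsed < SWIPE_MIN_SPEED) {
        return SwipeNone;                       /* not fast enough */
    }
    return dx > 0.0 ? SwipeRight : SwipeLeft;
}
```

A finger that travels 50 points to the right in 0.1 seconds with 2 points of vertical drift is classified as a right swipe; the same movement spread over 2 seconds is rejected as too slow.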

Listing 2-4 shows an even simpler implementation of tracking a single touch, but this time for the purposes of dragging the receiving view around the screen. In this instance, the responder class fully implements only the touchesMoved:withEvent: method, and in this method computes a delta value between the touch's current location in the view and its previous location in the view. It then uses this delta value to reset the origin of the view’s frame.

Listing 2-4 Dragging a view using a single touch

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    }

    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *aTouch = [touches anyObject];
        CGPoint loc = [aTouch locationInView:self];
        CGPoint prevloc = [aTouch previousLocationInView:self];

        CGRect myFrame = self.frame;
        float deltaX = loc.x - prevloc.x;
        float deltaY = loc.y - prevloc.y;
        myFrame.origin.x += deltaX;
        myFrame.origin.y += deltaY;
        [self setFrame:myFrame];
    }

    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    }

    - (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    }

Handling a Complex Multitouch Sequence

Taps, drags, and swipes are simple gestures, typically involving only a single touch. Handling a touch event consisting of two or more touches is a more complicated affair. You may have to track all touches through all phases, recording the touch attributes that have changed and altering internal state appropriately. There are a couple of things you should do when tracking and handling multiple touches:

Set the multipleTouchEnabled property of the view to YES.

Use a Core Foundation dictionary object (CFDictionaryRef) to track the mutations of touches through their phases during the event.

When handling an event with multiple touches, you often store initial bits of each touch’s state for later comparison with the mutated UITouch instance. As an example, say you want to compare the final location of each touch with its original location. In the touchesBegan:withEvent: method, you can obtain the original location of each touch from the locationInView: method and store those in a CFDictionaryRef object using the addresses of the UITouch objects as keys. Then, in the touchesEnded:withEvent: method you can use the address of each passed-in UITouch object to obtain the object’s original location and compare that with its current location. (You should use a CFDictionaryRef type rather than an NSDictionary object; the latter copies its keys, but the UITouch class does not adopt the NSCopying protocol, which is required for object copying.)

Listing 2-5 illustrates how you might store beginning locations of UITouch objects in a Core Foundation dictionary.

Listing 2-5 Storing the beginning locations of multiple touches

    - (void)cacheBeginPointForTouches:(NSSet *)touches
    {
        if ([touches count] > 0) {
            for (UITouch *touch in touches) {
                CGPoint *point = (CGPoint *)CFDictionaryGetValue(touchBeginPoints, touch);
                if (point == NULL) {
                    point = (CGPoint *)malloc(sizeof(CGPoint));
                    CFDictionarySetValue(touchBeginPoints, touch, point);
                }
                *point = [touch locationInView:view.superview];
            }
        }
    }
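Listing 2-5 keys each stored point by the address of its touch object. The bookkeeping can be modelled in plain C as follows, including a removal step that a responder would presumably perform when a touch ends or is cancelled. This is a sketch under stated assumptions, not the technique used by the listing: TouchTable, TouchPoint, the touch_table_ functions, and the fixed capacity are all hypothetical, and a fixed array stands in for the Core Foundation dictionary.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_TRACKED_TOUCHES 16            /* assumed capacity for this sketch */

typedef struct { double x, y; } TouchPoint;   /* stand-in for CGPoint */

typedef struct {
    const void *key;      /* address of the touch object; NULL marks a free slot */
    TouchPoint  begin;    /* location recorded when the touch began */
} TouchEntry;

typedef struct {
    TouchEntry entries[MAX_TRACKED_TOUCHES];
} TouchTable;

void touch_table_init(TouchTable *table)
{
    for (size_t i = 0; i < MAX_TRACKED_TOUCHES; i++) {
        table->entries[i].key = NULL;
    }
}

/* Returns the slot whose key matches, or NULL; a NULL key finds a free slot. */
static TouchEntry *find_entry(TouchTable *table, const void *key)
{
    for (size_t i = 0; i < MAX_TRACKED_TOUCHES; i++) {
        if (table->entries[i].key == key) {
            return &table->entries[i];
        }
    }
    return NULL;
}

/* Stores or overwrites the begin point for a touch, as Listing 2-5 does. */
bool touch_table_store(TouchTable *table, const void *touch, TouchPoint point)
{
    if (touch == NULL) return false;
    TouchEntry *entry = find_entry(table, touch);
    if (entry == NULL) entry = find_entry(table, NULL);   /* first free slot */
    if (entry == NULL) return false;                      /* table is full */
    entry->key = touch;
    entry->begin = point;
    return true;
}

/* Returns the stored begin point, or NULL if the touch is not tracked. */
const TouchPoint *touch_table_lookup(TouchTable *table, const void *touch)
{
    if (touch == NULL) return NULL;
    TouchEntry *entry = find_entry(table, touch);
    return entry != NULL ? &entry->begin : NULL;
}

/* Forgets a touch, for example when it ends or the sequence is cancelled. */
void touch_table_remove(TouchTable *table, const void *touch)
{
    if (touch == NULL) return;
    TouchEntry *entry = find_entry(table, touch);
    if (entry != NULL) entry->key = NULL;
}
```

Keying by address works because a touch object persists for a given finger throughout the sequence; the entry should be removed once that touch ends so that a stale address is never matched later.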

Listing 2-6 illustrates how to retrieve those initial locations stored in the dictionary. It also gets the current locations of the same touches. It uses these values in computing an affine transformation (not shown).

Listing 2-6 Retrieving the initial locations of touch objects

    - (CGAffineTransform)incrementalTransformWithTouches:(NSSet *)touches {
        NSArray *sortedTouches = [[touches allObjects] sortedArrayUsingSelector:@selector(compareAddress:)];

        // other code here ...

        UITouch *touch1 = [sortedTouches objectAtIndex:0];
        UITouch *touch2 = [sortedTouches objectAtIndex:1];

        CGPoint beginPoint1 = *(CGPoint *)CFDictionaryGetValue(touchBeginPoints, touch1);
        CGPoint currentPoint1 = [touch1 locationInView:view.superview];
        CGPoint beginPoint2 = *(CGPoint *)CFDictionaryGetValue(touchBeginPoints, touch2);
        CGPoint currentPoint2 = [touch2 locationInView:view.superview];

        // compute the affine transform...
    }

Although the code example in Listing 2-7 doesn’t use a dictionary to track touch mutations, it also handles multiple touches during an event. It shows a custom UIView object responding to touches by animating the movement of a “Welcome” placard around the screen as a finger moves it and changing the language of the welcome when the user makes a double-tap gesture. (The code in this example comes from the MoveMe sample code project, which you can examine to get a better understanding of the event-handling context.)

Listing 2-7 Handling a complex multitouch sequence

    - (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *touch = [[event allTouches] anyObject];
        // Only move the placard view if the touch was in the placard view
        if ([touch view] != placardView) {
            // On double tap outside placard view, update placard's display string
            if ([touch tapCount] == 2) {
                [placardView setupNextDisplayString];
            }
            return;
        }
        // "Pulse" the placard view by scaling up then down
        // Use UIView's built-in animation
        [UIView beginAnimations:nil context:NULL];
        [UIView setAnimationDuration:0.5];
        CGAffineTransform transform = CGAffineTransformMakeScale(1.2, 1.2);
        placardView.transform = transform;
        [UIView commitAnimations];

        [UIView beginAnimations:nil context:NULL];
        [UIView setAnimationDuration:0.5];
        transform = CGAffineTransformMakeScale(1.1, 1.1);
        placardView.transform = transform;
        [UIView commitAnimations];

        // Move the placardView to under the touch
        [UIView beginAnimations:nil context:NULL];
        [UIView setAnimationDuration:0.25];
        placardView.center = [self convertPoint:[touch locationInView:self] fromView:placardView];
        [UIView commitAnimations];
    }

    - (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *touch = [[event allTouches] anyObject];

        // If the touch was in the placardView, move the placardView to its location
        if ([touch view] == placardView) {
            CGPoint location = [touch locationInView:self];
            location = [self convertPoint:location fromView:placardView];
            placardView.center = location;
            return;
        }
    }

    - (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
        UITouch *touch = [[event allTouches] anyObject];

        // If the touch was in the placardView, bounce it back to the center
        if ([touch view] == placardView) {
            // Disable user interaction so subsequent touches don't interfere with animation
            self.userInteractionEnabled = NO;
            [self animatePlacardViewToCenter];
            return;
        }
    }

Note: Custom views that redraw themselves in response to events they handle generally should only set drawing state in the event-handling methods and perform all of the drawing in the drawRect: method. To learn more about drawing view content, see View Programming Guide for iOS.

To find out when the last finger in a multitouch sequence is lifted from a view, compare the number of UITouch objects in the passed-in set with the number of touches for the view maintained by the passed-in UIEvent object. If they are the same, then the multitouch sequence has concluded. Listing 2-8 illustrates how to do this in code.

Listing 2-8 Determining when the last touch in a multitouch sequence has ended

    - (void)touchesEnded:(NSSet*)touches withEvent:(UIEvent*)event {
        if ([touches count] == [[event touchesForView:self] count]) {
            // last finger has lifted....
        }
    }

Remember that the passed-in set contains all touch objects associated with the receiving view that are new or changed for the given phase, whereas the touch objects returned from touchesForView: include all objects associated with the specified view.

Hit-Testing

Your custom responder can use hit-testing to find the subview or sublayer of itself that is “under” a touch, and then handle the event appropriately. It does this either by calling the hitTest:withEvent: method of UIView or the hitTest: method of CALayer, or by overriding one of these methods. Responders sometimes perform hit-testing prior to event forwarding (see “Forwarding Touch Events”).

If you have a custom view with subviews, you need to determine whether you want to handle touches at the subview level or the superview level. If the subviews do not handle touches by implementing touchesBegan:withEvent:, touchesEnded:withEvent:, or touchesMoved:withEvent:, then these messages propagate up the responder chain to the superview. However, because multiple taps and multiple touches are associated with the subviews where they first occurred, the superview won’t receive these touches. To ensure reception of all kinds of touches, the superview should override hitTest:withEvent: to return itself rather than any of its subviews.
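The override described in the previous paragraph might look like the following sketch. The class name TouchClaimingView is a hypothetical placeholder; the only UIKit behavior relied on is that the inherited hitTest:withEvent: returns nil when the point is outside the view or the view cannot receive touches.

```objc
#import <UIKit/UIKit.h>

// Hypothetical superview that wants to receive every touch inside its
// bounds, including touches that land on its subviews.
@interface TouchClaimingView : UIView
@end

@implementation TouchClaimingView

- (UIView *)hitTest:(CGPoint)point withEvent:(UIEvent *)event {
    // The inherited implementation returns self, a descendant, or nil.
    UIView *hitView = [super hitTest:point withEvent:event];
    // Claim any hit in this subtree; pass nil through unchanged.
    return (hitView != nil) ? self : nil;
}

@end
```

With this override in place, subviews such as buttons no longer become the hit-test view themselves, so use it only when the superview is meant to do all of the touch handling.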

The example in Listing 2-9 detects when an “Info” image in a layer of the custom view is tapped.

Listing 2-9 Calling hitTest: on a view’s CALayer object

- (void)touchesEnded:(NSSet*)touches withEvent:(UIEvent*)event {
    CGPoint location = [[touches anyObject] locationInView:self];
    CALayer *hitLayer = [[self layer] hitTest:[self convertPoint:location fromView:nil]];
    if (hitLayer == infoImage) {
        [self displayInfo];
    }
}

In Listing 2-10, a responder subclass (in this case, a subclass of UIWindow) overrides hitTest:withEvent:. It first gets the hit-test view returned by the superclass. Then, if that view is itself, it substitutes the view that is furthest down the view hierarchy.

Listing 2-10 Overriding hitTest:withEvent:

- (UIView*)hitTest:(CGPoint)point withEvent:(UIEvent *)event {
    UIView *hitView = [super hitTest:point withEvent:event];
    if (hitView == self)
        return [[self subviews] lastObject];
    else
        return hitView;
}

Forwarding Touch Events

Event forwarding is a technique used by some applications. You forward touch events by invoking the event-handling methods of another responder object. Although this can be an effective technique, you should use it with caution. The classes of the UIKit framework are not designed to receive touches that are not bound to them; in programmatic terms, this means that the view property of the UITouch object must hold a reference to the framework object in order for the touch to be handled. If you want to conditionally forward touches to other responders in your application, all of these responders should be instances of your own subclasses of UIView.

For example, let’s say an application has three custom views: A, B, and C. When the user touches view A, the application’s window determines that it is the hit-test view and sends the initial touch event to it. Depending on certain conditions, view A forwards the event to either view B or view C. In this case, views A, B, and C must be aware that this forwarding is going on, and views B and C must be able to deal with touches that are not bound to them.
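The scenario above could be sketched as follows. Everything here is an assumption made for illustration: the class name ForwardingViewA, the viewB and viewC properties, and especially the forwarding condition (left half of view A goes to B, right half to C). Views B and C are assumed to be your own UIView subclasses that tolerate touches whose view property refers to view A.

```objc
#import <UIKit/UIKit.h>

// Hypothetical view A, which forwards its touches to view B or view C.
@interface ForwardingViewA : UIView {
    UIView *currentTarget;   // chosen when a multitouch sequence begins
}
@property (nonatomic, assign) UIView *viewB;   // not retained by view A
@property (nonatomic, assign) UIView *viewC;   // not retained by view A
@end

@implementation ForwardingViewA

@synthesize viewB, viewC;

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    if (currentTarget == nil) {
        // Illustrative condition only: pick the target by touch position.
        CGPoint point = [[touches anyObject] locationInView:self];
        currentTarget = (point.x < CGRectGetMidX(self.bounds)) ? self.viewB
                                                               : self.viewC;
    }
    [currentTarget touchesBegan:touches withEvent:event];
}

- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    [currentTarget touchesMoved:touches withEvent:event];
}

- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    [currentTarget touchesEnded:touches withEvent:event];
    // Same test as Listing 2-8: the last finger has lifted from this view.
    if ([touches count] == [[event touchesForView:self] count]) {
        currentTarget = nil;
    }
}

- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    [currentTarget touchesCancelled:touches withEvent:event];
    currentTarget = nil;
}

@end
```

Choosing the target once per sequence keeps the began, moved, and ended messages for a given gesture going to the same responder, which is usually what the receiving view expects.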

Event forwarding often requires analysis of touch objects to determine where they should be forwarded. There are several approaches you can take for this analysis:

With an “overlay” view (such as a common superview), use hit-testing to intercept events for analysis prior to forwarding them to subviews (see “Hit-Testing”).

Override sendEvent: in a custom subclass of UIWindow, analyze touches, and forward them to the appropriate responders. In your implementation you should always invoke the superclass implementation of sendEvent:.

Design your application so that touch analysis isn’t necessary.

Listing 2-11 illustrates the second technique, that of overriding sendEvent: in a subclass of UIWindow. In this example, the object to which touch events are forwarded is a custom “helper” responder that performs affine transformations on the view that it is associated with.

Listing 2-11 Forwarding touch events to “helper” responder objects

- (void)sendEvent:(UIEvent *)event
{
    for (TransformGesture *gesture in transformGestures) {
        // collect all the touches we care about from the event
        NSSet *touches = [gesture observedTouchesForEvent:event];
        NSMutableSet *began = nil;
        NSMutableSet *moved = nil;
        NSMutableSet *ended = nil;
        NSMutableSet *cancelled = nil;
        // sort the touches by phase so we can handle them similarly to normal event dispatch
        for (UITouch *touch in touches) {
            switch ([touch phase]) {
                case UITouchPhaseBegan:
                    if (!began) began = [NSMutableSet set];
                    [began addObject:touch];
                    break;
                case UITouchPhaseMoved:
                    if (!moved) moved = [NSMutableSet set];
                    [moved addObject:touch];
                    break;
                case UITouchPhaseEnded:
                    if (!ended) ended = [NSMutableSet set];
                    [ended addObject:touch];
                    break;
                case UITouchPhaseCancelled:
                    if (!cancelled) cancelled = [NSMutableSet set];
                    [cancelled addObject:touch];
                    break;
                default:
                    break;
            }
        }
        // call our methods to handle the touches
        if (began) [gesture touchesBegan:began withEvent:event];
        if (moved) [gesture touchesMoved:moved withEvent:event];
        if (ended) [gesture touchesEnded:ended withEvent:event];
        if (cancelled) [gesture touchesCancelled:cancelled withEvent:event];
    }
    [super sendEvent:event];
}

Notice that the overriding subclass in this example does something important to the integrity of the touch-event stream: it invokes the superclass implementation of sendEvent:.

Handling Events in Subclasses of UIKit Views and Controls

If you subclass a view or control class of the UIKit framework (for example, UIImageView or UISwitch) for the purpose of altering or extending event-handling behavior, you should keep the following points in mind:

Unlike in a custom view, it is not necessary to override each event-handling method.

Always invoke the superclass implementation of each event-handling method that you do override.

Do not forward events to UIKit framework objects.
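A short sketch of the first two points, using a hypothetical UIImageView subclass named CountingImageView. The touchEndCount instance variable is an assumption added for illustration.

```objc
#import <UIKit/UIKit.h>

// Hypothetical UIImageView subclass that counts the touches ending in it.
@interface CountingImageView : UIImageView {
    NSUInteger touchEndCount;
}
@end

@implementation CountingImageView

// Only the method whose behavior changes is overridden.
- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    touchEndCount += [touches count];
    // Let the superclass handle the event as it normally would.
    [super touchesEnded:touches withEvent:event];
}

@end
```

Note that UIImageView sets userInteractionEnabled to NO by default, so an instance of this subclass receives touches only after that property is set to YES.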

Best Practices for Handling Multitouch Events

When handling events, both touch events and motion events, there are a few recommended techniques and patterns you should follow.

Always implement the event-cancellation methods.

In your implementation, you should restore the state of the view to what it was before the current multitouch sequence, freeing any transient resources set up for handling the event. If you don’t implement the cancellation method your view could be left in an inconsistent state. In some cases, another view might receive the cancellation message.

If you handle events in a subclass of UIView, UIViewController, or (in rare cases) UIResponder:

    Implement all of the event-handling methods (even if it is a null implementation).

    Do not call the superclass implementation of the methods.

If you handle events in a subclass of any other UIKit responder class:

    You do not have to implement all of the event-handling methods.

    But in the methods you do implement, be sure to call the superclass implementation. For example,

    [super touchesBegan:theTouches withEvent:theEvent];

Do not forward events to other responder objects of the UIKit framework.

The responders that you forward events to should be instances of your own subclasses of UIView, and all of these objects must be aware that event-forwarding is taking place and that, in the case of touch events, they may receive touches that are not bound to them.

Custom views that redraw themselves in response to events should only set drawing state in the event-handling methods and perform all of the drawing in the drawRect: method.

Do not explicitly send events up the responder chain (via nextResponder); instead, invoke the superclass implementation and let UIKit handle responder-chain traversal.
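The first two practices can be illustrated with a small sketch. DraggableView is a hypothetical direct subclass of UIView that follows a finger and, if the sequence is cancelled, returns to where it was before the drag started; the class and instance variable names are assumptions.

```objc
#import <UIKit/UIKit.h>

// Hypothetical draggable view that restores its position on cancellation.
@interface DraggableView : UIView {
    CGPoint centerBeforeDrag;   // state saved at the start of the sequence
}
@end

@implementation DraggableView

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    centerBeforeDrag = self.center;
}

- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    UITouch *touch = [touches anyObject];
    // center is expressed in the superview's coordinate system
    self.center = [touch locationInView:self.superview];
}

- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event {
    // Null implementation: the view simply stays where it was dropped.
}

- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event {
    // Restore the state from before the current multitouch sequence.
    self.center = centerBeforeDrag;
}

@end
```

All four methods are implemented and none of them calls the superclass implementation, which matches the guidance for direct subclasses of UIView.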

Last updated: 2010-08-03