Working with OpenGL ES Contexts and Framebuffers

Any OpenGL ES implementation provides a platform-specific library that includes functions to create and manipulate a rendering context. The rendering context maintains a copy of all OpenGL ES state variables and accepts and executes all OpenGL ES commands. In iOS, EAGL is the library that provides this functionality. An EAGL context (EAGLContext) is a rendering context: it executes OpenGL ES commands and interacts with Core Animation to present the final images to the user. An EAGL sharegroup (EAGLSharegroup) extends the rendering context by allowing multiple rendering contexts to share OpenGL ES objects. In iOS, you might use a sharegroup to conserve memory by sharing textures and other expensive resources among contexts.

Creating an EAGL Context

Before your application can execute any OpenGL ES commands, it must first create and initialize an EAGL context and make it the current context.

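```objc
EAGLContext* myContext = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1];
// initWithAPI: returns nil if the device does not support the requested API version.
if (!myContext || ![EAGLContext setCurrentContext:myContext]) {
    NSLog(@"failed to create or set the current EAGL context");
}
```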

When your application initializes the context, it chooses which version of OpenGL ES to use for that context. For more information on choosing a version of OpenGL ES, see “Which Version Should I Target?”

Each thread in your application maintains a pointer to a current rendering context. When your application makes a context current, EAGL releases any previous context, retains the context object and sets it as the target for future OpenGL ES rendering commands. Note that in many cases, creating multiple rendering contexts may be unnecessary. You can often accomplish the same results using a single rendering context and one framebuffer object for each image you need to render.

Creating Framebuffer Objects

Although an EAGL context receives commands, it is not the ultimate target of those commands. Your application provides a destination to render the pixels into. In iOS, all images are rendered to framebuffer objects. Framebuffer objects are provided by all OpenGL ES 2.0 implementations, and Apple also provides them in all implementations of OpenGL ES 1.1 through the GL_OES_framebuffer_object extension. Framebuffer objects allow an application to precisely control the creation of color, depth, and stencil targets. Most of the time, these targets are renderbuffers; a renderbuffer is simply a 2D image of pixels with a height, width, and format. The color target can also be a texture.

The procedure to create a framebuffer is similar whether the color target is a renderbuffer or a texture:

Create a framebuffer object.

Create one or more targets (renderbuffers or textures), allocate storage for them, and attach them to the framebuffer object.

Test the framebuffer for completeness.

The following sections explore these concepts in more detail.

Offscreen Framebuffer Objects

An offscreen framebuffer uses an OpenGL ES renderbuffer to hold the rendered image.

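The following code allocates a complete offscreen framebuffer object on OpenGL ES 1.1. An OpenGL ES 2.0 application would omit the OES suffix from the function names and most constants; GL_RGBA8_OES keeps its suffix, because OpenGL ES 2.0 provides that renderbuffer format only through the OES_rgb8_rgba8 extension.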

Create the framebuffer and bind it so that future OpenGL ES framebuffer commands are directed to it.

```objc
GLuint framebuffer;
glGenFramebuffersOES(1, &framebuffer);
glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);
```

Create a color renderbuffer, allocate storage for it, and attach it to the framebuffer.

```objc
GLuint colorRenderbuffer;
glGenRenderbuffersOES(1, &colorRenderbuffer);
glBindRenderbufferOES(GL_RENDERBUFFER_OES, colorRenderbuffer);
glRenderbufferStorageOES(GL_RENDERBUFFER_OES, GL_RGBA8_OES, width, height);
glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES, GL_COLOR_ATTACHMENT0_OES, GL_RENDERBUFFER_OES, colorRenderbuffer);
```

Perform similar steps to create and attach a depth renderbuffer.

```objc
GLuint depthRenderbuffer;
glGenRenderbuffersOES(1, &depthRenderbuffer);
glBindRenderbufferOES(GL_RENDERBUFFER_OES, depthRenderbuffer);
glRenderbufferStorageOES(GL_RENDERBUFFER_OES, GL_DEPTH_COMPONENT16_OES, width, height);
glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES, GL_DEPTH_ATTACHMENT_OES, GL_RENDERBUFFER_OES, depthRenderbuffer);
```

Test the framebuffer for completeness.

```objc
GLenum status = glCheckFramebufferStatusOES(GL_FRAMEBUFFER_OES);
if (status != GL_FRAMEBUFFER_COMPLETE_OES) {
    NSLog(@"failed to make complete framebuffer object %x", status);
}
```
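When the completeness test fails, the hexadecimal value in the log message is easier to act on once it is translated back into its token name. The sketch below is plain C so that it compiles on its own: the token values are copied from the OES_framebuffer_object extension and are defined locally only when the OpenGL ES headers have not already provided them, and framebuffer_status_name is a hypothetical helper, not part of OpenGL ES or EAGL.

```c
/* Token values from the OES_framebuffer_object extension. They are defined
   here only when the OpenGL ES headers (which normally provide them) are absent. */
#ifndef GL_FRAMEBUFFER_COMPLETE_OES
#define GL_FRAMEBUFFER_COMPLETE_OES                      0x8CD5
#define GL_FRAMEBUFFER_INCOMPLETE_ATTACHMENT_OES         0x8CD6
#define GL_FRAMEBUFFER_INCOMPLETE_MISSING_ATTACHMENT_OES 0x8CD7
#define GL_FRAMEBUFFER_INCOMPLETE_DIMENSIONS_OES         0x8CD9
#define GL_FRAMEBUFFER_INCOMPLETE_FORMATS_OES            0x8CDA
#define GL_FRAMEBUFFER_UNSUPPORTED_OES                   0x8CDD
#endif

/* Hypothetical helper: translate a glCheckFramebufferStatusOES result into
   its token name so it can be logged alongside the raw value. */
const char *framebuffer_status_name(unsigned int status)
{
    switch (status) {
    case GL_FRAMEBUFFER_COMPLETE_OES:
        return "GL_FRAMEBUFFER_COMPLETE_OES";
    case GL_FRAMEBUFFER_INCOMPLETE_ATTACHMENT_OES:
        return "GL_FRAMEBUFFER_INCOMPLETE_ATTACHMENT_OES";
    case GL_FRAMEBUFFER_INCOMPLETE_MISSING_ATTACHMENT_OES:
        return "GL_FRAMEBUFFER_INCOMPLETE_MISSING_ATTACHMENT_OES";
    case GL_FRAMEBUFFER_INCOMPLETE_DIMENSIONS_OES:
        return "GL_FRAMEBUFFER_INCOMPLETE_DIMENSIONS_OES";
    case GL_FRAMEBUFFER_INCOMPLETE_FORMATS_OES:
        return "GL_FRAMEBUFFER_INCOMPLETE_FORMATS_OES";
    case GL_FRAMEBUFFER_UNSUPPORTED_OES:
        return "GL_FRAMEBUFFER_UNSUPPORTED_OES";
    default:
        return "unrecognized framebuffer status";
    }
}
```

For example, GL_FRAMEBUFFER_INCOMPLETE_DIMENSIONS_OES usually means the color and depth attachments were allocated with different widths or heights, since the extension requires all attachments to have the same size.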

Using Framebuffer Objects to Render to a Texture

Your application might want to render directly into a texture and use it as a source for later drawing. For example, you could use this to render a reflection in a mirror that could then be composited into your scene. The code to create this framebuffer is almost identical to the offscreen example, except that this time a texture is attached to the color attachment point.

Create the framebuffer object.

```objc
GLuint framebuffer;
glGenFramebuffersOES(1, &framebuffer);
glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);
```

Create a texture to hold the color data.

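```objc
// Create the texture. OpenGL ES requires the internal format to match the format (GL_RGBA).
GLuint texture;
glGenTextures(1, &texture);
glBindTexture(GL_TEXTURE_2D, texture);
// The default minification filter expects mipmaps; GL_LINEAR lets the texture be sampled without them.
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, NULL);
```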

Attach the texture to the framebuffer.

```objc
glFramebufferTexture2DOES(GL_FRAMEBUFFER_OES, GL_COLOR_ATTACHMENT0_OES, GL_TEXTURE_2D, texture, 0);
```

Allocate and attach a depth buffer.

```objc
GLuint depthRenderbuffer;
glGenRenderbuffersOES(1, &depthRenderbuffer);
glBindRenderbufferOES(GL_RENDERBUFFER_OES, depthRenderbuffer);
glRenderbufferStorageOES(GL_RENDERBUFFER_OES, GL_DEPTH_COMPONENT16_OES, width, height);
glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES, GL_DEPTH_ATTACHMENT_OES, GL_RENDERBUFFER_OES, depthRenderbuffer);
```

Test the framebuffer for completeness.

```objc
GLenum status = glCheckFramebufferStatusOES(GL_FRAMEBUFFER_OES);
if (status != GL_FRAMEBUFFER_COMPLETE_OES) {
    NSLog(@"failed to make complete framebuffer object %x", status);
}
```
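Each attachment in these framebuffers is allocated at the full width and height, so memory use grows with both the pixel count and the formats you choose. The helper below is a rough back-of-the-envelope sketch, assuming 4 bytes per pixel for an RGBA8 color target (a GL_RGBA8_OES renderbuffer or a GL_RGBA/GL_UNSIGNED_BYTE texture) and 2 bytes per pixel for GL_DEPTH_COMPONENT16_OES. Drivers may pad or align storage, so treat the result as a lower bound; framebuffer_bytes is a hypothetical name.

```c
#include <stddef.h>

/* Bytes per pixel for the formats used in the examples above (assumed, unpadded). */
enum {
    RGBA8_BYTES_PER_PIXEL   = 4, /* GL_RGBA8_OES, or GL_RGBA + GL_UNSIGNED_BYTE */
    DEPTH16_BYTES_PER_PIXEL = 2  /* GL_DEPTH_COMPONENT16_OES */
};

/* Hypothetical estimate of the storage for one framebuffer whose color target
   and depth renderbuffer share the same width and height. Ignores padding. */
size_t framebuffer_bytes(size_t width, size_t height,
                         size_t color_bytes_per_pixel,
                         size_t depth_bytes_per_pixel)
{
    return width * height * (color_bytes_per_pixel + depth_bytes_per_pixel);
}
```

For a 320 x 480 target, framebuffer_bytes(320, 480, RGBA8_BYTES_PER_PIXEL, DEPTH16_BYTES_PER_PIXEL) comes to 921,600 bytes before any padding.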

Drawing to the Screen

Offscreen renderbuffers and textures are useful, but neither can display its pixels on the screen. To do that, your application needs to interact with Core Animation.

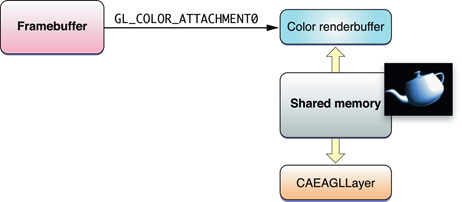

In iOS, all UIView objects are backed by Core Animation layers. In order for your application to present OpenGL ES content to the screen, your application needs a UIView object as the target. Further, that view must be backed by a special Core Animation layer, a CAEAGLLayer object. A CAEAGLLayer object is aware of OpenGL ES and references a renderbuffer, as shown in Figure 3-1. When your application wants to display these results, the contents of this renderbuffer are animated and composited with other Core Animation layers and sent to the screen.

The OpenGL ES template provided by Xcode does this work for you, but it is illustrative to walk through the steps used to create a framebuffer object that can be displayed to the screen.

Subclass UIView and set up a view for your iPhone application.

Override the layerClass method of the UIView class so that objects of your view class create and initialize a CAEAGLLayer object rather than a CALayer object.

```objc
+ (Class) layerClass
{
    return [CAEAGLLayer class];
}
```

Get the layer associated with the view by calling the layer method of UIView.

```objc
myEAGLLayer = (CAEAGLLayer*)self.layer;
```

Set the layer properties.

For optimal performance, mark the layer as opaque by setting the opaque property, inherited from the CALayer class, to YES. See “Displaying Your Results.”

Optionally, configure the surface properties of the rendering surface by assigning a new dictionary of values to the drawableProperties property of the CAEAGLLayer object. EAGL allows you to specify the format of rendered pixels and whether the renderbuffer retains its contents after they are presented to the screen. You identify these properties in the dictionary using the kEAGLDrawablePropertyColorFormat and kEAGLDrawablePropertyRetainedBacking keys. For a list of the keys you can set, see EAGLDrawable Protocol Reference.

Create the framebuffer, as before.

```objc
GLuint framebuffer;
glGenFramebuffersOES(1, &framebuffer);
glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);
```

Create the color renderbuffer, and ask the rendering context to allocate its storage from the Core Animation layer. The width, height, and format of the renderbuffer storage are derived from the bounds and properties of the CAEAGLLayer object at the moment the renderbufferStorage:fromDrawable: method is called.

```objc
GLuint colorRenderbuffer;
glGenRenderbuffersOES(1, &colorRenderbuffer);
glBindRenderbufferOES(GL_RENDERBUFFER_OES, colorRenderbuffer);
[myContext renderbufferStorage:GL_RENDERBUFFER_OES fromDrawable:myEAGLLayer];
glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES, GL_COLOR_ATTACHMENT0_OES, GL_RENDERBUFFER_OES, colorRenderbuffer);
```

If the Core Animation layer’s properties change, your application should reallocate the renderbuffer by calling renderbufferStorage:fromDrawable: again. Failure to do so can result in the rendered image being scaled or transformed for display, which may incur a significant performance cost. For example, in the template, the framebuffer and renderbuffer objects are destroyed and recreated whenever the bounds of the CAEAGLLayer object change.

Retrieve the height and width of the color renderbuffer.

```objc
GLint width;
GLint height;
glGetRenderbufferParameterivOES(GL_RENDERBUFFER_OES, GL_RENDERBUFFER_WIDTH_OES, &width);
glGetRenderbufferParameterivOES(GL_RENDERBUFFER_OES, GL_RENDERBUFFER_HEIGHT_OES, &height);
```

Allocate and attach the depth buffer.

```objc
GLuint depthRenderbuffer;
glGenRenderbuffersOES(1, &depthRenderbuffer);
glBindRenderbufferOES(GL_RENDERBUFFER_OES, depthRenderbuffer);
glRenderbufferStorageOES(GL_RENDERBUFFER_OES, GL_DEPTH_COMPONENT16_OES, width, height);
glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES, GL_DEPTH_ATTACHMENT_OES, GL_RENDERBUFFER_OES, depthRenderbuffer);
```

Test the framebuffer object.

```objc
GLenum status = glCheckFramebufferStatusOES(GL_FRAMEBUFFER_OES);
if (status != GL_FRAMEBUFFER_COMPLETE_OES) {
    NSLog(@"failed to make complete framebuffer object %x", status);
}
```
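Because the drawable renderbuffer takes its size from the layer, a view can remember the width and height it read back with glGetRenderbufferParameterivOES and compare them with the layer's current size in pixels whenever its layout changes. A minimal sketch of that decision in C, assuming a hypothetical DrawableSize record and helper name; it checks size only, although a change to drawableProperties would also call for reallocation:

```c
#include <stdbool.h>

/* Hypothetical record of the dimensions the color renderbuffer was
   allocated with, as read back with glGetRenderbufferParameterivOES. */
typedef struct {
    int width;
    int height;
} DrawableSize;

/* Returns true when the layer's current pixel size differs from the
   allocated renderbuffer. In that case the color renderbuffer should be
   reallocated with renderbufferStorage:fromDrawable:, and the depth
   renderbuffer reallocated to the new width and height. */
bool drawable_needs_realloc(DrawableSize allocated, int layer_width, int layer_height)
{
    return allocated.width != layer_width || allocated.height != layer_height;
}
```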

To reiterate, the procedure to create a framebuffer object is similar in all three cases, differing only in how you allocate the object attached to the color attachment point of the framebuffer object.

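| Color attachment | Distinguishing call |
| --- | --- |
| Offscreen renderbuffer | glRenderbufferStorageOES(GL_RENDERBUFFER_OES, GL_RGBA8_OES, width, height); |
| Drawable renderbuffer | [myContext renderbufferStorage:GL_RENDERBUFFER_OES fromDrawable:myEAGLLayer]; |
| Texture | glFramebufferTexture2DOES(GL_FRAMEBUFFER_OES, GL_COLOR_ATTACHMENT0_OES, GL_TEXTURE_2D, texture, 0); |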

Drawing to a Framebuffer Object

Once you’ve allocated a framebuffer object, you can render to it. All rendering is targeted at the currently bound framebuffer.

```objc
glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);
```

Displaying Your Results

Assuming you allocated a color renderbuffer to point at a Core Animation layer, you present its contents by making it the current renderbuffer and calling the presentRenderbuffer: method on your rendering context.

```objc
glBindRenderbufferOES(GL_RENDERBUFFER_OES, colorRenderbuffer);
[myContext presentRenderbuffer:GL_RENDERBUFFER_OES];
```

By default, the contents of the renderbuffer are invalidated after it is presented to the screen, so your application must redraw the entire contents of the renderbuffer every frame. If your application needs to preserve the contents between frames, it should set the kEAGLDrawablePropertyRetainedBacking key to YES in the dictionary stored in the drawableProperties property of the CAEAGLLayer object. Retaining the contents of the layer may require additional memory to be allocated, which can reduce your application’s performance.

When the renderbuffer is presented to the screen, it is animated and composited with any other Core Animation layers visible on the screen, regardless of whether those layers were drawn with OpenGL ES, Quartz, or another graphics library. Mixing OpenGL ES content with other content comes at a performance penalty. For best performance, your application should rely solely on OpenGL ES to render your content. To do this, create a screen-sized CAEAGLLayer object, set the opaque property to YES, and ensure that no other Core Animation layers or views are visible.

If you must composite OpenGL ES content with other layers, making your CAEAGLLayer object opaque reduces, but doesn’t eliminate, the performance penalty.

If your CAEAGLLayer object is blended with other layers, Core Animation incurs a significant performance penalty. You can reduce this penalty by placing your layer behind other UIKit layers.

Note: If you must blend transparent OpenGL ES content, the contents of your renderbuffer must use premultiplied alpha for Core Animation to composite them correctly.
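Premultiplied alpha means each color channel has already been multiplied by the pixel's alpha, so a half-transparent pure red stores roughly half-intensity red. In a renderer you would normally arrange for your drawing to produce such values directly, for example by using source images whose colors are already premultiplied; the CPU-side conversion below only illustrates the arithmetic, assuming tightly packed 8-bit RGBA pixels. premultiply_rgba8 is a hypothetical helper.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert straight-alpha RGBA8 pixels to premultiplied alpha in place.
   Each color channel becomes round(channel * alpha / 255); alpha is unchanged. */
void premultiply_rgba8(uint8_t *pixels, size_t pixel_count)
{
    for (size_t i = 0; i < pixel_count; i++) {
        uint8_t *p = pixels + 4 * i;
        unsigned alpha = p[3];
        for (int c = 0; c < 3; c++) {
            /* Adding 127 before dividing rounds to the nearest integer. */
            p[c] = (uint8_t)((p[c] * alpha + 127u) / 255u);
        }
    }
}
```

A fully opaque pixel (alpha 255) comes through unchanged, and a fully transparent one becomes black with alpha 0.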

Finally, it is almost never necessary to apply Core Animation transforms to the CAEAGLLayer object, and doing so adds to the processing Core Animation must do before displaying your content. Your application can often perform the same tasks by changing the modelview and projection matrices (or the equivalents in your vertex shader) and swapping the width and height arguments to the glViewport and glScissor functions.
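As a sketch of that approach in C, assuming a fixed 90-degree rotation and hypothetical helper names: the rotation is folded into the matrix you already hand to OpenGL ES (for example through glLoadMatrixf in OpenGL ES 1.1 or glUniformMatrix4fv in OpenGL ES 2.0), and the viewport uses the renderbuffer's own portrait dimensions, which are the landscape width and height swapped.

```c
/* Column-major 4x4 matrix, the layout glLoadMatrixf and
   glUniformMatrix4fv (with transpose = GL_FALSE) expect. */
typedef struct { float m[16]; } Mat4;

/* +90 degree (counterclockwise) rotation about the z axis.
   Negate the two sine entries for the opposite orientation. */
Mat4 mat4_rotate_z90(void)
{
    Mat4 r = {{ 0.0f, 1.0f, 0.0f, 0.0f,   /* column 0: where the x axis goes */
               -1.0f, 0.0f, 0.0f, 0.0f,   /* column 1: where the y axis goes */
                0.0f, 0.0f, 1.0f, 0.0f,
                0.0f, 0.0f, 0.0f, 1.0f }};
    return r;
}

/* out = a * v, with a stored column-major. */
void mat4_mul_vec4(const Mat4 *a, const float v[4], float out[4])
{
    for (int row = 0; row < 4; row++) {
        out[row] = 0.0f;
        for (int col = 0; col < 4; col++) {
            out[row] += a->m[col * 4 + row] * v[col];
        }
    }
}

/* The renderbuffer keeps its portrait size, so a scene laid out in landscape
   terms passes its width and height swapped to glViewport. */
void landscape_viewport(int landscape_width, int landscape_height, int viewport[4])
{
    viewport[0] = 0;
    viewport[1] = 0;
    viewport[2] = landscape_height; /* renderbuffer width  */
    viewport[3] = landscape_width;  /* renderbuffer height */
}
```

In practice you would multiply this rotation into your projection matrix before loading it, then call glViewport with the four values landscape_viewport fills in; the layer itself keeps an identity transform.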

Sharegroups

An EAGLSharegroup object manages OpenGL ES resources associated with one or more EAGLContext objects. A sharegroup is usually created when an EAGLContext object is initialized and disposed of when the last EAGLContext object that references it is released. The sharegroup is an opaque object with no developer-accessible API.

To create multiple contexts using a single sharegroup, your application first creates a context as before, then creates one or more additional contexts using the initWithAPI:sharegroup: initializer.

```objc
EAGLContext* firstContext = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1];
EAGLContext* secondContext = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1 sharegroup:[firstContext sharegroup]];
```

Sharegroups manage textures, buffers, framebuffers, and renderbuffers. It is your application’s responsibility to manage state changes to shared objects when those objects are accessed from multiple contexts in the sharegroup. The results of changing the state of a shared object while it is being used for rendering in another context are undefined. To obtain deterministic results, your application must take explicit steps to ensure that the shared object is not currently being used for rendering when your application modifies it. Further, state changes are not guaranteed to be noticed by another context in the sharegroup until that context rebinds the shared object.

To ensure defined results of state changes to shared objects across contexts in the sharegroup, your application must perform the following tasks, in this order:

Change the state of an object.

Call glFlush on the rendering context that issues the state-modifying routines.

Each context must rebind the object to see the changes.

The original object is deleted after all contexts in the sharegroup have bound the new object.

Last updated: 2010-07-09